Over the last year or two, we have seen many agent frameworks emerge, such as LangChain, Spring AI, LangChain4j, and the Vercel AI SDK. These frameworks make it easier to build AI agents that perform complex tasks by combining large language models (LLMs) with various tools and APIs. Recently, Google introduced its own framework for building AI agents, the Agent Development Kit (ADK). It is designed to work seamlessly with Google's Gemini models and other Google AI capabilities, but it is also model-independent, platform-neutral, and compatible with other frameworks. ADK initially supported only Python; it now also supports Java, allowing developers to use Google's AI capabilities in their Java applications. In this blog post, we will explore the core concepts of ADK, its architecture, and how to build a complete learning assistant agent in Java.

ADK offers a comprehensive set of features designed to address the entire agent development lifecycle:

Multi-Agent Architecture: Create modular, scalable applications where different agents handle specific tasks, working in concert to achieve complex goals.

Rich Tool Ecosystem: Access pre-built tools (Search, Code Execution, etc.), create custom tools, implement Model Context Protocol (MCP) tools, or integrate third-party libraries.

Flexible Orchestration: Structured workflows using specialized workflow agents for predictable execution patterns, and dynamic LLM-driven routing for adaptive behavior.

Integrated Developer Experience: Powerful CLI and visual Web UI for local development, testing, and debugging.

Built-in Evaluation: Systematically assess agent performance, evaluating both final response quality and step-by-step execution trajectories.

Deployment Ready: Containerize and deploy agents anywhere, including integration with Google Cloud services.
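The structured and dynamic orchestration styles listed above are easiest to compare using workflow agents. The framework-free sketch below contrasts sequential and parallel execution. WorkflowSketch and Step are hypothetical names; this is not ADK's workflow agent API. The shared state map only loosely mirrors how ADK agents can write results into session state, for example through outputKey.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical, framework-free sketch of two orchestration styles (not ADK's workflow agent API).
final class WorkflowSketch {

    /** A unit of work: reads shared state and produces a value stored under outputKey. */
    record Step(String outputKey, Function<Map<String, String>, String> body) {}

    /** Sequential: each step sees the outputs of the steps before it. */
    static Map<String, String> runSequential(List<Step> steps, Map<String, String> initial) {
        Map<String, String> state = new ConcurrentHashMap<>(initial);
        for (Step step : steps) {
            state.put(step.outputKey(), step.body().apply(state));
        }
        return state;
    }

    /** Parallel: steps run concurrently on a snapshot, so they must not depend on each other. */
    static Map<String, String> runParallel(List<Step> steps, Map<String, String> initial) {
        Map<String, String> snapshot = Map.copyOf(initial);
        Map<String, String> state = new ConcurrentHashMap<>(initial);
        CompletableFuture<?>[] running = steps.stream()
                .map(step -> CompletableFuture.runAsync(
                        () -> state.put(step.outputKey(), step.body().apply(snapshot))))
                .toArray(CompletableFuture<?>[]::new);
        CompletableFuture.allOf(running).join(); // wait for every step to finish
        return state;
    }
}
```

Parallel steps read a snapshot taken before any of them start. A step that needs another step's output therefore belongs in a sequential workflow.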

Below are the core components of ADK:

An Agent is a self-contained execution unit designed to act autonomously to achieve specific goals. Agents can perform tasks, interact with users, use external tools, and coordinate with other agents.

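There are three main types of agents:

1. LLM Agents (LlmAgent): Use a large language model as their core engine to understand natural language, reason, plan, and dynamically decide which tools to use or which agent to hand off to.

2. Workflow Agents (SequentialAgent, ParallelAgent, LoopAgent): Control the execution flow of other agents in predefined patterns (in sequence, in parallel, or in a loop) without using an LLM for the flow control itself.

3. Custom Agents: Extend BaseAgent directly to implement specialized logic or control flow that the other types don't cover.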

As your AI applications grow, structuring them as a single agent becomes limiting. ADK enables you to build robust Multi-Agent Systems (MAS) by composing multiple agents—each with specialized roles—into a collaborative, maintainable architecture.

The foundation for structuring multi-agent systems is the parent-child relationship defined in BaseAgent.

You create a tree structure by passing a list of agent instances as sub-agents when building a parent agent (in ADK Java, through the builder's subAgents(...) method).
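The parent-child tree can be illustrated without ADK itself. The toy AgentNode class below (a hypothetical name, not ADK's BaseAgent) models only the resulting structure:

- Each child records its parent.
- An agent may have only one parent. The ADK multi-agent documentation describes the same single-parent rule.
- A depth-first findAgent method looks agents up by name.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Optional;
import java.util.Set;

// Hypothetical toy model of an agent tree (illustration only, not ADK's BaseAgent).
final class AgentNode {
    private final String name;
    private final List<AgentNode> subAgents = new ArrayList<>();
    private AgentNode parent; // assigned when this node is attached to a parent

    AgentNode(String name, AgentNode... children) {
        this.name = name;
        // Validate first so a rejected call leaves every child untouched.
        Set<AgentNode> seen = Collections.newSetFromMap(new IdentityHashMap<>());
        for (AgentNode child : children) {
            if (child.parent != null || !seen.add(child)) {
                throw new IllegalArgumentException(
                        "Agent '" + child.name + "' already has a parent or was listed twice");
            }
        }
        for (AgentNode child : children) {
            child.parent = this;
            subAgents.add(child);
        }
    }

    String name() { return name; }

    Optional<AgentNode> parent() { return Optional.ofNullable(parent); }

    List<AgentNode> subAgents() { return List.copyOf(subAgents); }

    /** Depth-first search for an agent by name, starting at this node. */
    Optional<AgentNode> findAgent(String target) {
        if (name.equals(target)) {
            return Optional.of(this);
        }
        for (AgentNode child : subAgents) {
            Optional<AgentNode> found = child.findAgent(target);
            if (found.isPresent()) {
                return found;
            }
        }
        return Optional.empty();
    }
}
```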

Tools let agents interact with the outside world, extending their capabilities beyond conversation to actions such as web search, code execution, and database queries. ADK provides a rich set of built-in tools, and you can also create custom tools tailored to your specific needs.

ADK supports the following types of tools:

1. Function Tools: Custom tools you create specifically for your application's unique requirements and business logic.

2. Built-in Tools: Pre-built tools provided by ADK for common operations such as Google Search, Code Execution, and Retrieval-Augmented Generation (RAG).

3. Third-Party Tools: Seamless integration with external tool libraries and frameworks, including LangChain Tools and CrewAI Tools.
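To make function tools concrete, here is a minimal sketch of the kind of plain Java method a function tool wraps. The StudyTools class, the createStudySchedule method, and its parameters are hypothetical, invented for this learning-assistant example. The ADK Java quickstart registers public static methods like this with FunctionTool.create(SomeClass.class, "methodName") and annotates parameters with @Schema. That registration call appears only in a comment, so the sketch compiles without ADK on the classpath.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical function tool for the learning assistant (name and logic are illustrative).
// With ADK on the classpath, a public static method like this could be registered, e.g.:
//   FunctionTool studyTool = FunctionTool.create(StudyTools.class, "createStudySchedule");
final class StudyTools {

    /** Splits a study budget into per-day hours and returns a map the model can read. */
    public static Map<String, Object> createStudySchedule(String topic, int totalHours, int days) {
        Map<String, Object> result = new LinkedHashMap<>();
        if (topic == null || topic.isBlank() || totalHours <= 0 || days <= 0) {
            // Report errors as data so the model can explain the problem to the user.
            result.put("status", "error");
            result.put("message", "topic must be non-empty and totalHours and days must be positive");
            return result;
        }
        int base = totalHours / days;  // hours every day gets
        int extra = totalHours % days; // leftover hours go to the first days
        List<Integer> hoursPerDay = new ArrayList<>();
        for (int day = 0; day < days; day++) {
            hoursPerDay.add(base + (day < extra ? 1 : 0));
        }
        result.put("status", "success");
        result.put("topic", topic);
        result.put("hoursPerDay", hoursPerDay);
        return result;
    }
}
```

The method returns a map with a status field rather than throwing an exception. This lets the model read a failure and explain it to the user.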

The ADK Runtime is the engine of your agentic application. It takes your defined agents, tools, and callbacks and orchestrates their execution in response to user input, managing the flow of information, state changes, and interactions with external services such as LLMs or storage.
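The runtime's job can be pictured as a loop:

1. Give the model the conversation so far.
2. Run any tool it asks for.
3. Record each step as an event.
4. Stop at a final answer.

The sketch below models that loop without ADK. RuntimeSketch, Event, Decision, and the maxSteps guard are hypothetical names chosen for illustration. ADK's real runtime also handles sessions, state changes, callbacks, and calls to external services.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical, framework-free model of an agent runtime loop (not ADK's Runner).
final class RuntimeSketch {

    /** One thing that happened during the run: who produced it and what it said. */
    record Event(String author, String text) {}

    /** What the "model" decides next: call a tool, or answer the user. */
    record Decision(String toolName, String toolArg, String finalText) {
        static Decision callTool(String name, String arg) { return new Decision(name, arg, null); }
        static Decision answer(String text) { return new Decision(null, null, text); }
        boolean isFinal() { return finalText != null; }
    }

    /** Feeds history to the model, runs requested tools, and stops at an answer or after maxSteps. */
    static List<Event> run(String userInput,
                           Function<List<Event>, Decision> model,
                           Map<String, Function<String, String>> tools,
                           int maxSteps) {
        List<Event> history = new ArrayList<>();
        history.add(new Event("user", userInput));
        for (int step = 0; step < maxSteps; step++) {
            Decision d = model.apply(List.copyOf(history));
            if (d.isFinal()) {
                history.add(new Event("agent", d.finalText()));
                return history;
            }
            history.add(new Event("agent", "call " + d.toolName() + "(" + d.toolArg() + ")"));
            Function<String, String> tool = tools.get(d.toolName());
            String result = (tool == null)
                    ? "error: unknown tool " + d.toolName()
                    : tool.apply(d.toolArg());
            history.add(new Event("tool:" + d.toolName(), result));
        }
        history.add(new Event("runtime", "stopped after " + maxSteps + " steps"));
        return history;
    }
}
```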

Models are the underlying language models that power agents, enabling them to understand and generate human-like text.

As of May 2025, ADK Java supports only Gemini and Anthropic models, with support for other models planned.

Callbacks are custom code snippets that run at specific points in an agent's lifecycle, allowing for checks, logging, or modifications to behavior.
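ADK's documentation describes two callbacks around the model call. A before-model callback can skip the LLM call entirely by returning a response of its own. An after-model callback can adjust the model's reply.

The framework-free sketch below models that contract with Optional. CallbackSketch and its constructor parameters are hypothetical, and ADK Java's actual callback signatures differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.function.Function;
import java.util.function.UnaryOperator;

// Hypothetical model of before/after callbacks around an LLM call (not ADK's callback API).
final class CallbackSketch {

    private final Function<String, Optional<String>> beforeModel; // may short-circuit the call
    private final UnaryOperator<String> afterModel;               // may rewrite the reply
    private final UnaryOperator<String> model;                    // stand-in for the LLM
    final List<String> log = new ArrayList<>();                   // records what happened

    CallbackSketch(Function<String, Optional<String>> beforeModel,
                   UnaryOperator<String> afterModel,
                   UnaryOperator<String> model) {
        this.beforeModel = beforeModel;
        this.afterModel = afterModel;
        this.model = model;
    }

    String call(String prompt) {
        Optional<String> override = beforeModel.apply(prompt);
        if (override.isPresent()) {
            log.add("model skipped by beforeModel callback");
            return override.get(); // the model is never invoked
        }
        log.add("model called");
        return afterModel.apply(model.apply(prompt));
    }
}
```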

Events are the basic unit of communication in ADK, representing things that happen during a session.
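A run produces a stream of events: tool calls, tool results, and, when streaming, partial text chunks before the complete message. Client code therefore often needs to pick out the agent's final answer.

The sketch below does this with a hypothetical, simplified Event record that has partial and isToolCall flags. ADK's real Event class carries more information, so treat these field names as assumptions.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical, simplified event model for illustration; ADK's real Event class is richer.
final class EventSketch {

    /** author: "user" or an agent name; partial: a streaming chunk rather than a complete message. */
    record Event(String author, String text, boolean partial, boolean isToolCall) {}

    /** Returns the text of the last complete, non-tool event authored by the given agent. */
    static Optional<String> finalResponse(List<Event> events, String agentName) {
        Optional<String> last = Optional.empty();
        for (Event e : events) {
            if (e.author().equals(agentName) && !e.partial() && !e.isToolCall()) {
                last = Optional.of(e.text());
            }
        }
        return last;
    }
}
```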

In this section, we will build a learning assistant agent using Google ADK with Java. It has the following components:

1. Learning Assistant Agent - The central LLM agent built with Google ADK

2. Google Search Tool - Integrated for real-time web search capabilities

3. YouTube MCP Tool - A Model Context Protocol (MCP) tool for video content, built in the earlier YouTube MCP Tool blog post. Refer to that post for details on how to build MCP servers and clients.

4. Gemini 2.0 Flash Model - The underlying AI model powering the agent

5. ADK Dev Web UI - The development and debugging interface

Create a new Maven project and add the following dependencies to your pom.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.codewiz</groupId>
    <artifactId>learning-assistant-agent-adk</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>23</maven.compiler.source>
        <maven.compiler.target>23</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    </properties>

    <dependencies>
        <!-- The ADK Core dependency -->
        <dependency>
            <groupId>com.google.adk</groupId>
            <artifactId>google-adk</artifactId>
            <version>0.1.0</version>
        </dependency>
        <!-- The ADK Dev Web UI to debug your agent (Optional) -->
        <dependency>
            <groupId>com.google.adk</groupId>
            <artifactId>google-adk-dev</artifactId>
            <version>0.1.0</version>
        </dependency>
    </dependencies>
</project>
```

Now, let's implement the Learning Assistant Agent step by step:

Create the agent with a name, description, and instructions that define its role as a learning assistant.

Use the Gemini 2.0 Flash model for advanced reasoning and streaming capabilities.

Add tools for real-time web search and YouTube video content retrieval using the Model Context Protocol (MCP).

Here's the complete implementation of our Learning Assistant Agent:

```java
import com.fasterxml.jackson.databind.ObjectMapper;
import com.google.adk.agents.BaseAgent;
import com.google.adk.agents.LlmAgent;
import com.google.adk.tools.AgentTool;
import com.google.adk.tools.BaseTool;
import com.google.adk.tools.GoogleSearchTool;
import com.google.adk.tools.mcp.McpToolset;
import com.google.adk.tools.mcp.SseServerParameters;
import java.util.ArrayList;
import java.util.stream.Collectors;

/**
 * Learning Assistant Agent built with Google ADK
 * Provides educational assistance, answers questions, and creates learning plans
 */
public class LearningAssistantAgent {

    // Agent configuration.
    // ROOT_AGENT is built when the class loads, so the MCP server must already be running.
    public static BaseAgent ROOT_AGENT = initAgent();

    private static final String USER_ID = "test-user";
    private static final String NAME = "learning-assistant";

    /**
     * Initialize the Learning Assistant Agent with tools and configuration
     */
    public static BaseAgent initAgent() {
        var toolList = getTools();
        return LlmAgent.builder()
                .name("learning-assistant")
                .description("An AI assistant designed to help with learning and educational tasks.")
                .model("gemini-2.0-flash")
                .instruction("""
                        You are a helpful learning assistant. Your role is to assist users with
                        educational tasks, answer questions, and provide explanations on various topics.

                        You should:
                        - Explain concepts clearly with examples
                        - Guide users through problem-solving processes
                        - Create personalized learning plans based on user goals
                        - Provide step-by-step explanations for complex topics
                        - Use available tools to get the most current information
                        - Adapt your teaching style to the user's level of understanding

                        Always be encouraging and supportive in your responses.
                        Use the tools available to provide accurate and up-to-date information.
                        """)
                .tools(toolList)
                .build();
    }

    /**
     * Configure and return the list of tools available to the agent
     */
    private static ArrayList<BaseTool> getTools() {
        // YouTube MCP server from the earlier blog post, exposed over SSE
        String mcpServerUrl = "http://localhost:8090/sse";
        SseServerParameters params = SseServerParameters.builder().url(mcpServerUrl).build();

        McpToolset.McpToolsAndToolsetResult toolsAndToolsetResult;
        try {
            // Connect to the MCP server and fetch its tool definitions (blocks until done)
            toolsAndToolsetResult = McpToolset.fromServer(params, new ObjectMapper()).get();
        } catch (Exception e) {
            throw new RuntimeException("Failed to load tools from MCP server at " + mcpServerUrl, e);
        }

        var toolList = toolsAndToolsetResult.getTools().stream()
                .map(mcpTool -> (BaseTool) mcpTool)
                .collect(Collectors.toCollection(ArrayList::new));

        // toolList.add(new GoogleSearchTool()); not working (Tool use with function calling is unsupported), workaround below
        // Add GoogleSearch tool - Workaround for https://github.com/google/adk-python/issues/134
        // GoogleSearchTool gets its own agent, which is then exposed to the root agent as a tool.
        LlmAgent googleSearchAgent = LlmAgent.builder()
                .model("gemini-2.0-flash")
                .name("google_search_agent")
                .description("Search Google for current information")
                .instruction("""
                        You are a specialist in Google Search.
                        Use the Google Search tool to find current, accurate information.
                        Always provide sources and ensure the information is up-to-date.
                        Summarize the key findings clearly and concisely.
                        """)
                .tools(new GoogleSearchTool())
                .outputKey("google_search_result")
                .build();

        AgentTool searchTool = AgentTool.create(googleSearchAgent, false);
        toolList.add(searchTool);
        return toolList;
    }
}
```

Next, set the following environment variables so the agent can access Gemini models:

```shell
export GOOGLE_GENAI_USE_VERTEXAI=FALSE
export GOOGLE_API_KEY=<your-google-api-key>
```

You can get your Google API key from the Google Cloud Console. Make sure to enable the Gemini API for your project.
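A missing key usually surfaces only when the first request fails, so it can help to check the configuration at startup. The EnvCheck helper below is a hypothetical sketch, not part of ADK. It checks only the two variables exported above. If you use Vertex AI instead, which relies on different settings, adjust REQUIRED accordingly.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical fail-fast configuration check (not part of ADK).
final class EnvCheck {

    // The two variables exported above for the Gemini API (non-Vertex) setup.
    static final List<String> REQUIRED = List.of("GOOGLE_GENAI_USE_VERTEXAI", "GOOGLE_API_KEY");

    /** Returns the names of required variables that are missing or blank in the given environment. */
    static List<String> missing(Map<String, String> env) {
        List<String> absent = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.isBlank()) {
                absent.add(name);
            }
        }
        return absent;
    }

    /** Call at startup, e.g. EnvCheck.requireGeminiEnv(System.getenv()); */
    static void requireGeminiEnv(Map<String, String> env) {
        List<String> absent = missing(env);
        if (!absent.isEmpty()) {
            throw new IllegalStateException("Set these environment variables first: " + absent);
        }
    }
}
```

Taking the environment as a Map, rather than calling System.getenv() inside the helper, keeps the check easy to test with made-up values.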

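To run the agent, we need a main method that initializes the agent and starts a session. You can add it to the LearningAssistantAgent class or put it in a separate class. In the latter case, reference LearningAssistantAgent.ROOT_AGENT and define the app name and user ID there, because NAME and USER_ID are private.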

Here's how you can do it:

We use the InMemoryRunner, which runs the agent and keeps its sessions in memory; this is suitable for development and testing. To deploy the agent in production, you would typically use a runner configured with persistent services that fit your deployment environment, such as a database-backed session store.

Then we create a session for the user and start an interactive loop where the user can input questions or requests, and the agent responds using the configured tools.

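```java
// Additional imports for the main method (add to the top of LearningAssistantAgent.java)
import com.google.adk.events.Event;
import com.google.adk.runner.InMemoryRunner;
import com.google.adk.sessions.Session;
import com.google.genai.types.Content;
import com.google.genai.types.Part;
import io.reactivex.rxjava3.core.Flowable;
import java.nio.charset.StandardCharsets;
import java.util.Scanner;

/**
 * Main method to run the Learning Assistant Agent
 */
public static void main(String[] args) {
    // In-memory runner: sessions and events are lost when the JVM exits
    InMemoryRunner runner = new InMemoryRunner(ROOT_AGENT);

    Session session = runner
            .sessionService()
            .createSession(NAME, USER_ID)
            .blockingGet();

    try (Scanner scanner = new Scanner(System.in, StandardCharsets.UTF_8)) {
        while (true) {
            System.out.print("\nYou > ");
            if (!scanner.hasNextLine()) {
                break; // end of input (e.g. Ctrl+D)
            }
            String userInput = scanner.nextLine();
            if ("quit".equalsIgnoreCase(userInput)) {
                break;
            }

            Content userMsg = Content.fromParts(Part.fromText(userInput));
            Flowable<Event> events = runner.runAsync(USER_ID, session.id(), userMsg);

            System.out.print("\nAgent > ");
            // Print each event the runner emits as it arrives
            events.blockingForEach(event -> System.out.println(event.stringifyContent()));
        }
    }
}
```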
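The InMemoryRunner keeps sessions in process memory, which is why it suits development but not production. The hypothetical InMemorySessions class below sketches what such a store boils down to. It is a map keyed by app name, user ID, and session ID, holding each session's event history. It is an illustration only, not ADK's own in-memory session service.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory session store (illustration only, not ADK's session service).
final class InMemorySessions {

    /** One user's conversation with one app, identified by a generated id. */
    record Session(String appName, String userId, String id, List<String> events) {}

    // All sessions live in this map, so they vanish when the JVM exits.
    private final Map<String, Session> sessions = new ConcurrentHashMap<>();

    private static String key(String appName, String userId, String sessionId) {
        return appName + "/" + userId + "/" + sessionId;
    }

    Session createSession(String appName, String userId) {
        String id = UUID.randomUUID().toString();
        Session session = new Session(appName, userId, id,
                Collections.synchronizedList(new ArrayList<>()));
        sessions.put(key(appName, userId, id), session);
        return session;
    }

    Optional<Session> getSession(String appName, String userId, String sessionId) {
        return Optional.ofNullable(sessions.get(key(appName, userId, sessionId)));
    }

    void appendEvent(Session session, String event) {
        session.events().add(event);
    }
}
```

Creating a fresh store, as happens when the application restarts, leaves every earlier session unreachable. A database-backed session service avoids this.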

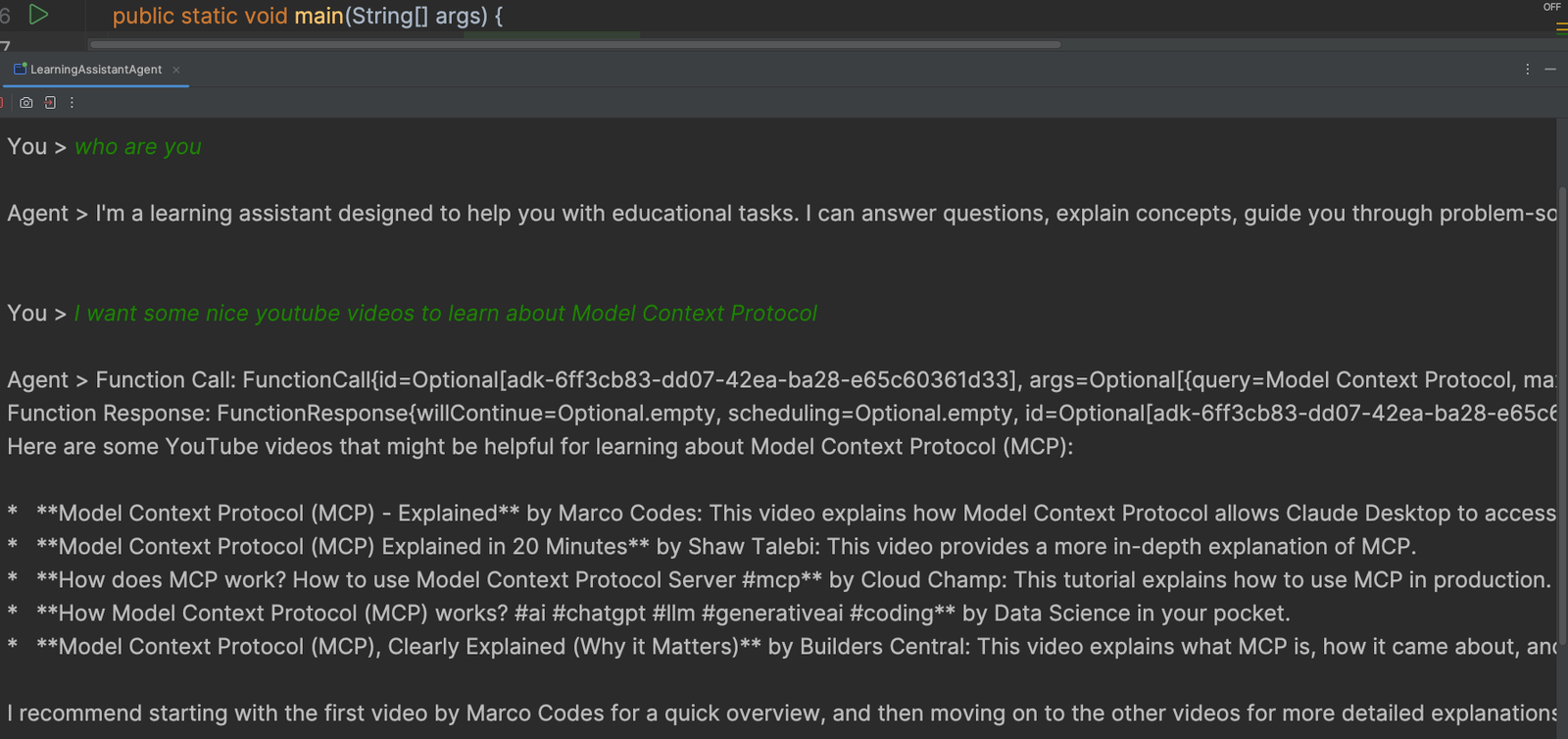

A screen capture of the agent in action is shown below:

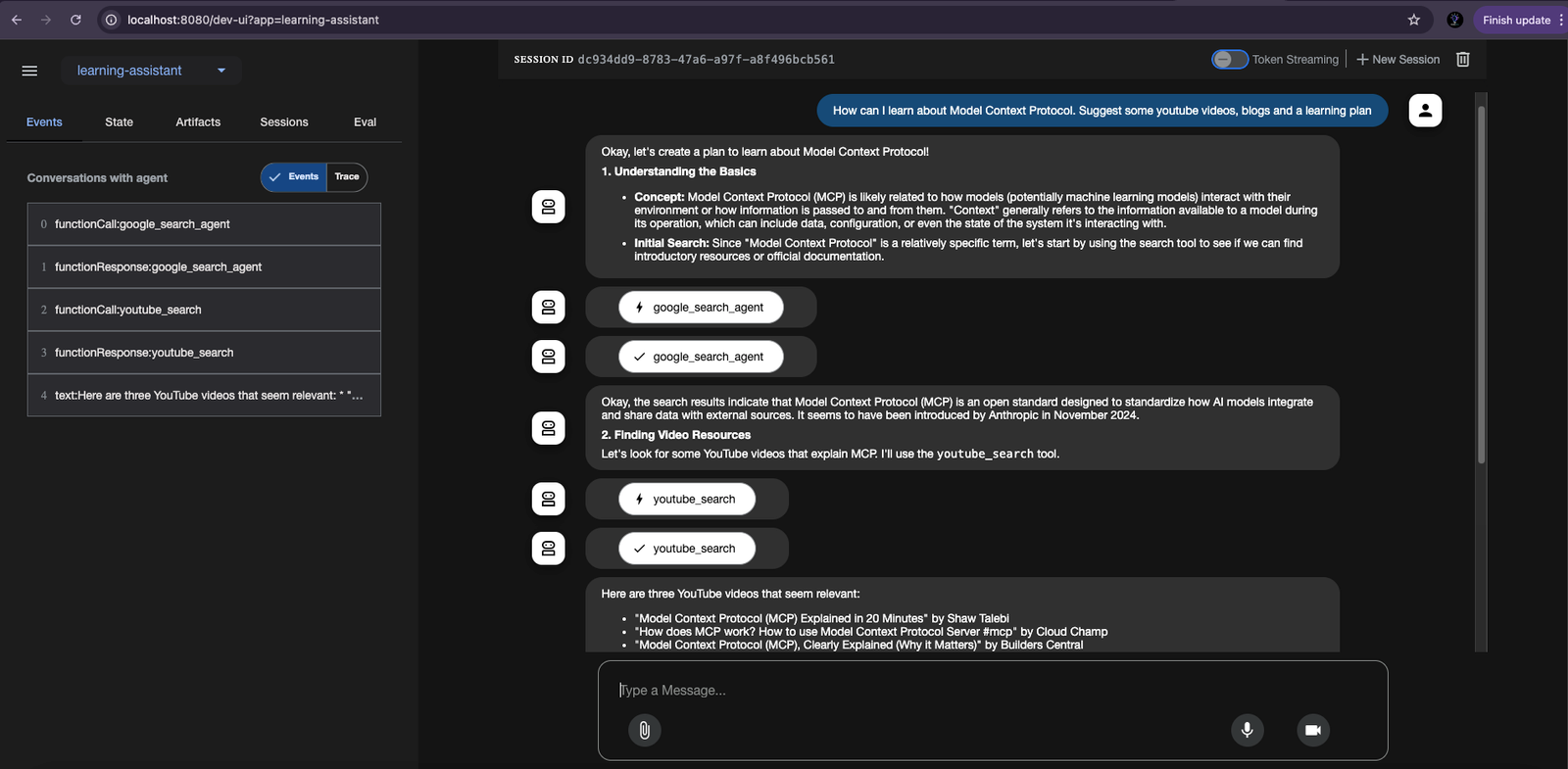

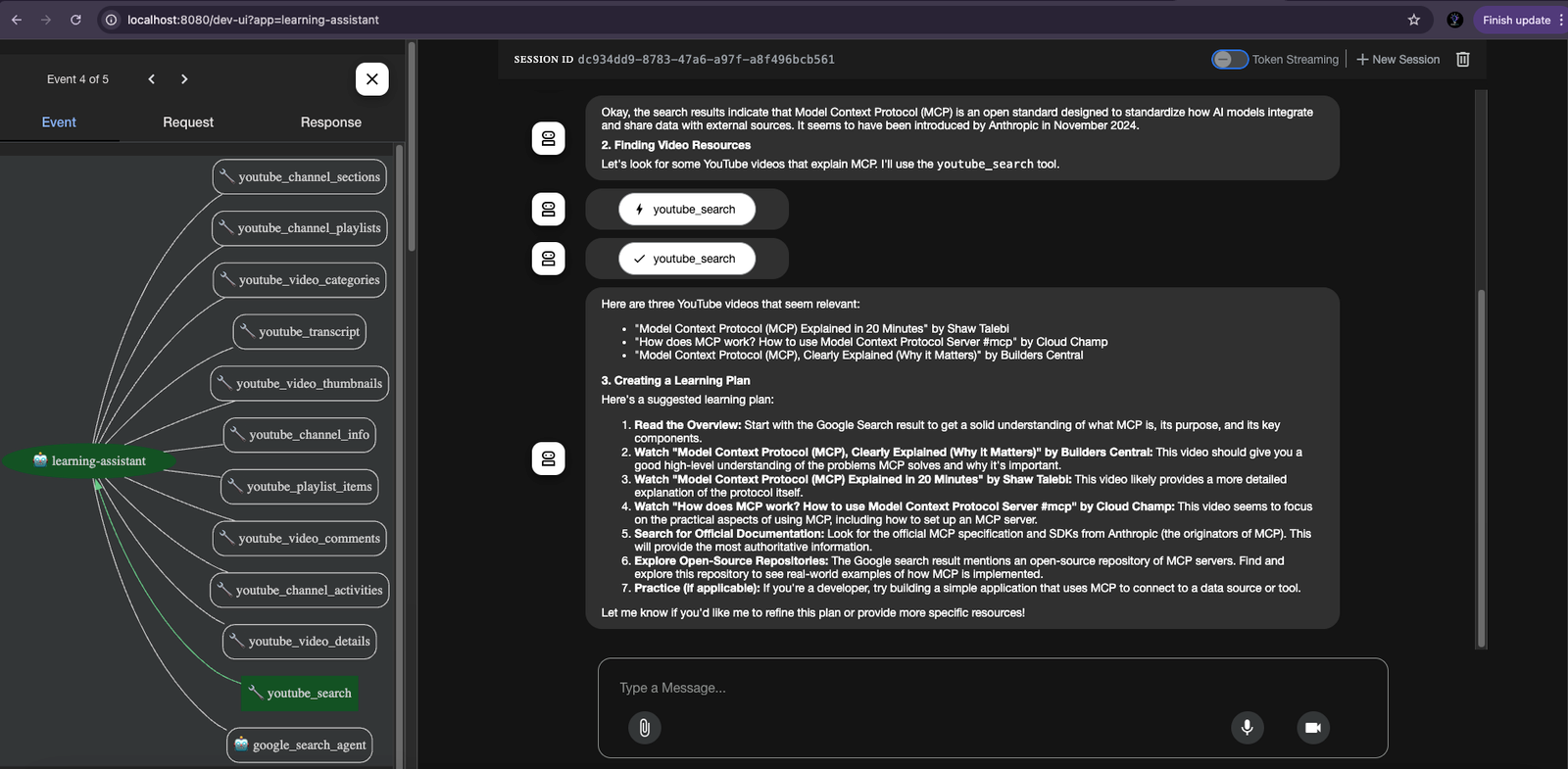

ADK provides a Web UI, built with Spring, for testing and debugging agents, which is very useful during development. It lets you interact with your agents, view their responses, inspect the events they generate, and more.

Start the ADK Web UI by running the following command in the terminal:

```shell
mvn exec:java \
    -Dexec.mainClass="com.google.adk.web.AdkWebServer" \
    -Dexec.args="--adk.agents.source-dir=src/main/java" \
    -Dexec.classpathScope="compile"
```
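The command points the server at src/main/java. As we understand the ADK Java quickstart, the Dev UI picks up agents exposed through a public static field named ROOT_AGENT, which is why LearningAssistantAgent declares one.

The sketch below is our own illustration of how that style of discovery can work with plain Java reflection. RootAgentFinder and SampleAgentHolder are hypothetical names, and this is not AdkWebServer's actual implementation.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.Optional;

// Illustrative reflection-based discovery of a ROOT_AGENT field (not AdkWebServer's real code).
final class RootAgentFinder {

    /** Returns the value of a public static field named ROOT_AGENT, if the class declares one. */
    static Optional<Object> findRootAgent(Class<?> candidate) {
        try {
            Field field = candidate.getDeclaredField("ROOT_AGENT");
            int modifiers = field.getModifiers();
            if (!Modifier.isPublic(modifiers) || !Modifier.isStatic(modifiers)) {
                return Optional.empty(); // only public static fields are treated as agents here
            }
            return Optional.ofNullable(field.get(null)); // static field, so no instance is needed
        } catch (NoSuchFieldException | IllegalAccessException e) {
            return Optional.empty();
        }
    }
}

// Stand-in for LearningAssistantAgent; a String replaces BaseAgent so this compiles without ADK.
final class SampleAgentHolder {
    public static Object ROOT_AGENT = "demo-agent";
}
```

Reading a static field initializes its class. For LearningAssistantAgent, that runs initAgent() and connects to the MCP server, so any discovery of this kind needs the MCP server running first.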


Google's Agent Development Kit (ADK) provides a powerful, production-ready framework for building sophisticated AI agents. Although the Java version is still in its early stages, it offers a solid foundation for creating agents that can reason, use tools, and interact with users naturally.

You can find the source code for the Learning Assistant Agent on my GitHub repository: Learning Assistant Agent ADK.

Happy agent building! 🚀


Stay tuned for more content on the latest in AI and software engineering!
