Anyone who has worked with microservices of reasonable complexity knows that failures are inevitable. In a distributed system, where multiple services communicate over the network, failures can occur due to various reasons such as network issues, service downtime, or unexpected errors. These failures can lead to a poor user experience if not handled properly.

If these intermittently failing services are not handled properly, they can cause a cascading failure, in which the failure of one service causes the services that depend on it to fail as well. This can result in a complete system outage, leading to significant downtime and loss of revenue.

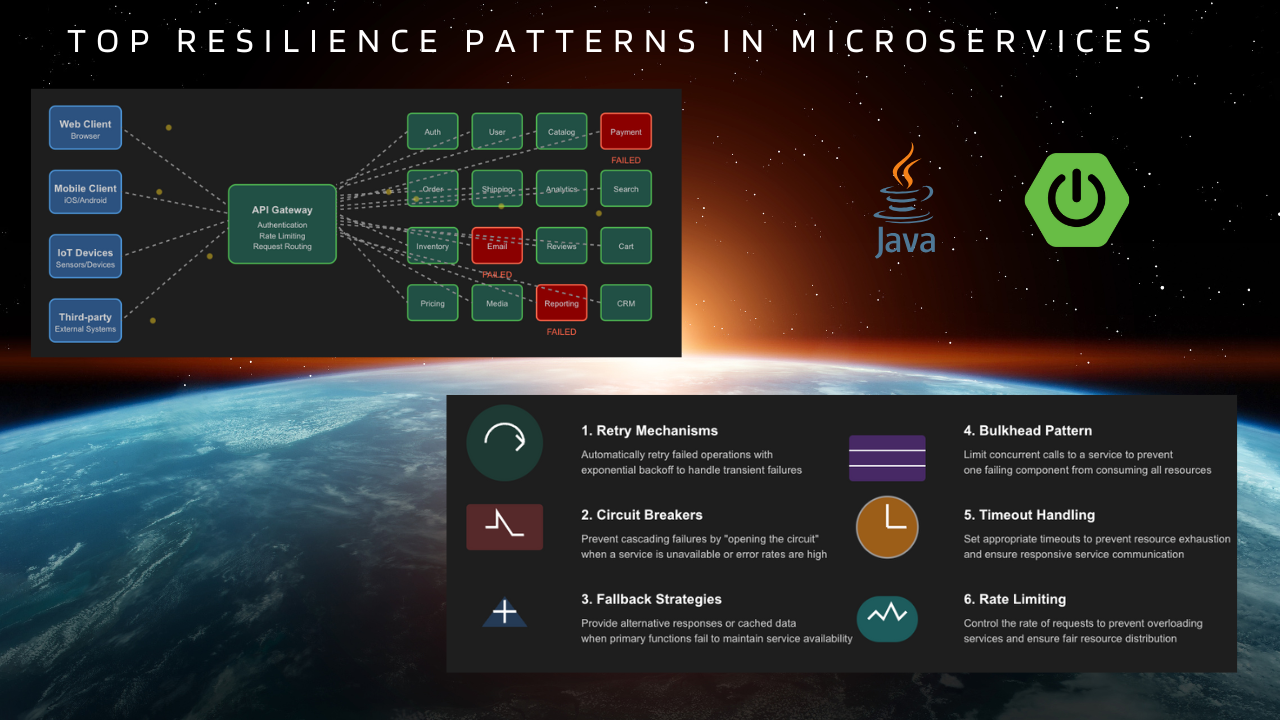

To build robust and reliable applications, we need to implement resilience patterns that allow our services to gracefully handle failures and continue functioning.

Resilience patterns are design strategies that help applications recover from failures, maintain service availability, and minimize business impact during outages, while still providing a seamless user experience.

In this blog post, we will explore the key resilience patterns and implement them in a Trip Planner API using Spring Boot.

These patterns are most relevant for synchronous integrations between microservices, where one service calls another and waits for its response.

We'll implement these patterns using Spring Retry and Resilience4j, demonstrating how to make your services more reliable with minimal code changes.

Before diving into the code, let's understand the main resilience patterns we'll implement:

The retry pattern involves automatically retrying a failed operation with the expectation that it might succeed on subsequent attempts. This is useful for handling transient failures such as network blips or temporary service unavailability.

In the above diagram, the client sends a request to Service A, which in turn calls Service B. If Service B fails, Service A retries the call after a backoff period. The backoff can be exponential, meaning the wait grows by a constant factor with each failed attempt.
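To make the backoff arithmetic concrete, here is a minimal plain-Java sketch of retry with exponential backoff. `RetryWithBackoff` and its `call` method are hypothetical names used only for illustration (in the Trip Planner API, Spring Retry's `@Retryable` does this work), and the sketch assumes every `RuntimeException` is worth retrying.

```java
import java.util.function.Supplier;

// Hypothetical retry helper for illustration; this is not Spring Retry's API.
final class RetryWithBackoff {

    private RetryWithBackoff() {
    }

    // Calls the operation up to maxAttempts times. After every failed attempt except the
    // last, it sleeps for the current delay and then multiplies the delay by the multiplier.
    static <T> T call(Supplier<T> operation, int maxAttempts, long initialDelayMs, double multiplier) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        long delayMs = initialDelayMs;
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return operation.get();
            } catch (RuntimeException e) {
                lastFailure = e;
                if (attempt < maxAttempts) {
                    sleep(delayMs);
                    delayMs = (long) (delayMs * multiplier); // d, d*m, d*m^2, ...
                }
            }
        }
        throw lastFailure; // every attempt failed: surface the last error to the caller
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted during backoff", e);
        }
    }
}
```

With the settings used later in this post (initial delay 1000 ms, multiplier 3, three attempts), this helper would wait 1 second after the first failure and 3 seconds after the second before giving up.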

Named after electrical circuit breakers, this pattern "trips" when too many failures occur, temporarily preventing further calls to the failing service. This allows the failing service time to recover and prevents cascading failures throughout your system.

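This diagram illustrates the three main states of a circuit breaker:

- Closed: calls pass through to the service normally while the circuit breaker records their outcomes.
- Open: once the failure rate crosses a threshold, calls are rejected immediately (or routed to a fallback) without reaching the failing service.
- Half-Open: after a wait period, a limited number of trial calls are let through. If they succeed, the circuit closes again; if they fail, it opens again.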
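The sketch below models these three states as a small state machine in plain Java, so the transitions are easy to follow. `SimpleCircuitBreaker` is a hypothetical class: its settings borrow the names of the Resilience4j properties configured later in this post, but the logic is simplified (for example, any failed trial call in the half-open state reopens the circuit) and it is not how Resilience4j is implemented.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Hypothetical, simplified circuit breaker with a count-based sliding window.
// Setting names loosely mirror Resilience4j's properties; this is not its implementation.
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final double failureRateThreshold;  // percent, e.g. 10.0
    private final int minimumNumberOfCalls;     // outcomes needed before the rate is evaluated
    private final int slidingWindowSize;        // how many recent outcomes are kept
    private final int permittedCallsInHalfOpen; // trial calls allowed while HALF_OPEN
    private final long waitInOpenMillis;        // time to stay OPEN before probing again
    private final LongSupplier clock;           // current time in ms (injectable for tests)

    private final Deque<Boolean> window = new ArrayDeque<>(); // true = failed call
    private State state = State.CLOSED;
    private long openedAt;
    private int halfOpenPermits;
    private int halfOpenSuccesses;

    SimpleCircuitBreaker(double failureRateThreshold, int minimumNumberOfCalls, int slidingWindowSize,
                         int permittedCallsInHalfOpen, long waitInOpenMillis, LongSupplier clock) {
        this.failureRateThreshold = failureRateThreshold;
        this.minimumNumberOfCalls = minimumNumberOfCalls;
        this.slidingWindowSize = slidingWindowSize;
        this.permittedCallsInHalfOpen = permittedCallsInHalfOpen;
        this.waitInOpenMillis = waitInOpenMillis;
        this.clock = clock;
    }

    <T> T call(Supplier<T> operation, Supplier<T> fallback) {
        if (!tryAcquirePermission()) {
            return fallback.get(); // OPEN: fail fast without calling the remote service
        }
        try {
            T result = operation.get();
            onResult(false);
            return result;
        } catch (RuntimeException e) {
            onResult(true);
            return fallback.get();
        }
    }

    synchronized State state() { return state; }

    private synchronized boolean tryAcquirePermission() {
        if (state == State.OPEN && clock.getAsLong() - openedAt >= waitInOpenMillis) {
            state = State.HALF_OPEN; // wait elapsed: let a limited number of trial calls through
            halfOpenPermits = permittedCallsInHalfOpen;
            halfOpenSuccesses = 0;
        }
        if (state == State.CLOSED) {
            return true;
        }
        if (state == State.HALF_OPEN && halfOpenPermits > 0) {
            halfOpenPermits--;
            return true;
        }
        return false;
    }

    private synchronized void onResult(boolean failed) {
        if (state == State.OPEN) {
            return; // late result from a call admitted before the circuit opened
        }
        if (state == State.HALF_OPEN) {
            // Simplification: any failed trial reopens; enough successful trials close the circuit.
            if (failed) {
                open();
            } else if (++halfOpenSuccesses >= permittedCallsInHalfOpen) {
                state = State.CLOSED; // window was already cleared when the circuit opened
            }
            return;
        }
        window.addLast(failed);
        if (window.size() > slidingWindowSize) {
            window.removeFirst(); // keep only the most recent outcomes
        }
        if (window.size() >= minimumNumberOfCalls) {
            long failures = window.stream().filter(Boolean::booleanValue).count();
            if (100.0 * failures / window.size() >= failureRateThreshold) {
                open();
            }
        }
    }

    private void open() {
        state = State.OPEN;
        openedAt = clock.getAsLong();
        window.clear();
    }
}
```

For example, `new SimpleCircuitBreaker(10.0, 5, 5, 1, 60_000, System::currentTimeMillis)` would open after five consecutive failures and allow a single trial call a minute later.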

The fallback pattern provides alternative functionality when a service call fails, such as returning cached data or default values.

This diagram shows that when the primary call to an external API fails, the service can invoke a fallback method to return a default or cached response, ensuring the client still receives a meaningful reply instead of an error.
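A common way to implement the "cached data" variant is to remember the last successful response and serve it when the primary call fails. The sketch below assumes a simple in-memory map is acceptable; `CachingFallback` is a hypothetical class, not part of Spring or Resilience4j.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical "last known good" fallback. Successful responses are cached per key;
// when the primary call fails, the cached value is returned, or a static default
// if nothing has been cached yet. Values must be non-null (ConcurrentHashMap rule).
final class CachingFallback<K, V> {
    private final Function<K, V> primary;
    private final V defaultValue;
    private final Map<K, V> lastKnownGood = new ConcurrentHashMap<>();

    CachingFallback(Function<K, V> primary, V defaultValue) {
        this.primary = primary;
        this.defaultValue = defaultValue;
    }

    V get(K key) {
        try {
            V value = primary.apply(key);
            lastKnownGood.put(key, value); // remember the latest successful response
            return value;
        } catch (RuntimeException e) {
            // Primary call failed: degrade to cached data first, then to the default.
            return lastKnownGood.getOrDefault(key, defaultValue);
        }
    }
}
```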

The bulkhead pattern isolates different parts of a system to prevent failures in one part from affecting others. This is similar to how a ship's bulkheads prevent flooding in one compartment from sinking the entire vessel.

In this diagram, ServiceA calls both ServiceB and ServiceC through separate bulkheads. When ServiceB fails, the failure is contained within Bulkhead 1, allowing the call to ServiceC through Bulkhead 2 to proceed normally. This compartmentalization ensures that one failing service doesn't bring down the entire system.

Key benefits of the Bulkhead pattern:

Implementation typically involves thread pools, connection pools, or semaphores to limit concurrent calls to specific services and ensure resources are properly allocated and protected.
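Here is a minimal sketch of the semaphore approach in plain Java. `SemaphoreBulkhead` is a hypothetical class; creating one instance per downstream service (for example, one for ServiceB and one for ServiceC) is what keeps their permits separate. Resilience4j's `@Bulkhead`, used later in this post, is built on a similar idea.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical semaphore-based bulkhead: at most maxConcurrentCalls callers may be
// inside call() at once; others wait up to maxWaitMillis and are then rejected.
final class SemaphoreBulkhead {
    private final Semaphore permits;
    private final long maxWaitMillis;

    SemaphoreBulkhead(int maxConcurrentCalls, long maxWaitMillis) {
        this.permits = new Semaphore(maxConcurrentCalls, true); // fair: waiters served in order
        this.maxWaitMillis = maxWaitMillis;
    }

    <T> T call(Supplier<T> operation, Supplier<T> rejected) {
        boolean acquired;
        try {
            acquired = permits.tryAcquire(maxWaitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return rejected.get();
        }
        if (!acquired) {
            return rejected.get(); // compartment is full: reject instead of queueing forever
        }
        try {
            return operation.get();
        } finally {
            permits.release(); // always return the permit, even if the call throws
        }
    }

    int availablePermits() {
        return permits.availablePermits();
    }
}
```

The `maxWaitMillis` parameter plays the same role as Resilience4j's `max-wait-duration`: how long a caller may wait for a free slot before being rejected.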

The timeout handling pattern involves configuring a timeout for service calls and implementing logic to handle cases where the timeout is reached. This can include returning an error, returning a default response, or invoking a fallback method.

In this diagram, ServiceA calls an external API with a timeout set. If the API responds within the timeout, ServiceA returns the data to the client. If the timeout is exceeded, ServiceA returns a timeout error instead of waiting indefinitely. This pattern is essential for maintaining the responsiveness of your application, especially when dealing with slow or unreliable external services.
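With only the JDK, this behaviour can be sketched using `CompletableFuture.orTimeout` (available since Java 9). `Timeouts.callWithTimeout` is a hypothetical helper; it returns a default response when the call times out or fails, which is one of the options described above.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical timeout helper built on the JDK's CompletableFuture API.
final class Timeouts {

    private Timeouts() {
    }

    static <T> T callWithTimeout(Supplier<T> operation, long timeoutMillis, T defaultValue) {
        return CompletableFuture.supplyAsync(operation)
                // Completes the future with a TimeoutException if it is not done in time.
                .orTimeout(timeoutMillis, TimeUnit.MILLISECONDS)
                // On timeout (or any other failure), answer with the default instead.
                .exceptionally(error -> defaultValue)
                .join();
        // Note: orTimeout does not interrupt the running task; it only stops the caller waiting.
    }
}
```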

Rate limiting is a technique used to control the amount of incoming traffic to a service or API. It restricts the number of requests a client can make in a given time period. This is particularly important for APIs that are exposed to the public or to third-party services.

It helps prevent abuse and ensures fair usage of resources.

Normally this is done in API gateways or load balancers, but it can also be implemented at the service level.
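A fixed-window counter is one of the simplest ways to enforce such a limit at the service level. The sketch below is a hypothetical `FixedWindowRateLimiter` that allows `limitForPeriod` calls per refresh period; unlike the Resilience4j rate limiter configured later in this post, it rejects immediately instead of letting callers wait for the next period.

```java
import java.util.function.LongSupplier;

// Hypothetical fixed-window rate limiter: at most limitForPeriod permits per
// refresh period; the counter resets when a new period starts.
final class FixedWindowRateLimiter {
    private final int limitForPeriod;
    private final long refreshPeriodMillis;
    private final LongSupplier clock; // current time in ms, injectable for testing
    private long windowStart;
    private int used;

    FixedWindowRateLimiter(int limitForPeriod, long refreshPeriodMillis, LongSupplier clock) {
        this.limitForPeriod = limitForPeriod;
        this.refreshPeriodMillis = refreshPeriodMillis;
        this.clock = clock;
        this.windowStart = clock.getAsLong();
    }

    synchronized boolean tryAcquire() {
        long now = clock.getAsLong();
        if (now - windowStart >= refreshPeriodMillis) {
            // Jump to the period that contains "now" and reset the counter.
            long periodsElapsed = (now - windowStart) / refreshPeriodMillis;
            windowStart += periodsElapsed * refreshPeriodMillis;
            used = 0;
        }
        if (used < limitForPeriod) {
            used++;
            return true;
        }
        return false; // over the limit: reject (e.g. HTTP 429) or fall back
    }
}
```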

Now that we understand these patterns, let's implement them in a Trip Planner API.

This Trip Planner API is a simple RESTful API that allows users to plan trips by fetching data from external APIs like Google Places and OpenWeatherMap.

As shown in the diagram, our TripPlanner service follows these steps:

This integration works fine under ideal conditions, but what happens when these external services experience issues? Let's explore how to make our TripPlanner service more resilient.

We will implement resilience patterns in the API call to fetch weather information.

First, we need to add the necessary dependencies to our pom.xml file:

```xml
<!-- Spring Retry for implementing retry logic -->
<dependency>
    <groupId>org.springframework.retry</groupId>
    <artifactId>spring-retry</artifactId>
</dependency>
<!-- Required for the Spring Retry and Resilience4j annotations -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-aop</artifactId>
</dependency>
<!-- Resilience4j for circuit breaker, bulkhead, time limiter and rate limiter patterns -->
<dependency>
    <groupId>io.github.resilience4j</groupId>
    <artifactId>resilience4j-spring-boot3</artifactId>
    <version>2.1.0</version>
</dependency>
<!-- Exposes the /actuator endpoints used for monitoring -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>
```

Next, we need to enable Spring Retry in our application by adding the @EnableRetry annotation to our main application class:

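```java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.retry.annotation.EnableRetry;

@SpringBootApplication
@EnableRetry // enables processing of @Retryable and @Recover
public class TripPlannerApiApplication {

    public static void main(String[] args) {
        SpringApplication.run(TripPlannerApiApplication.class, args);
    }
}
```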

Spring Retry provides an easy way to implement retry logic in Spring applications using annotations. Let's implement a retry mechanism in our WeatherService class to handle transient failures when calling the OpenWeatherMap API.

```java
@Retryable(retryFor = Exception.class, maxAttempts = 3, backoff = @Backoff(delay = 1000, multiplier = 3))
public WeatherRecords.WeatherData getWeather(PlaceRecords.Location location, String travelDate) {
    log.info("Getting weather data for location: {} and date: {}", location, travelDate);
    // Relative URI: weatherClient supplies the OpenWeatherMap base URL
    String uriString = UriComponentsBuilder
            .fromUriString("/forecast?lat={lat}&lon={long}&appid={apiKey}&units=metric")
            .buildAndExpand(location.latitude(), location.longitude(), apiKey)
            .toUriString();
    var weatherResponse = weatherClient.get()
            .uri(uriString)
            .retrieve()
            .body(WeatherRecords.WeatherResponse.class);
    // Pick the first forecast entry whose timestamp falls on the travel date
    return weatherResponse.list().stream()
            .filter(weatherData -> weatherData.dtTxt().startsWith(travelDate))
            .findFirst()
            .orElseThrow(() -> new NoDataFoundException("No weather data found for the date", 100));
}
```

In this code:

- `@Retryable` specifies that the method should be retried if it fails.
- `retryFor` specifies the exception types that should trigger a retry.
- `maxAttempts` specifies the maximum number of attempts, including the initial attempt, so `maxAttempts = 3` means up to two retries.
- `backoff` specifies the delay between retries. With `delay = 1000` and `multiplier = 3`, Spring Retry waits 1 second before the first retry and 3 seconds before the second.

The configuration above retries on all exceptions, including `NoDataFoundException`, which is unlikely to succeed on a second attempt. To retry only on specific exceptions, list them in the `retryFor` attribute.

If all retry attempts fail, we can provide a fallback method using the @Recover annotation. Spring Retry calls this method once the attempts are exhausted, and it can return a default or cached response.

A recovery method has the same return type and parameters as the original method, with an additional first parameter for the exception type. The @Recover annotation marks it as the fallback:

```java
@Recover
public WeatherRecords.WeatherData recover(Exception e, PlaceRecords.Location location, String travelDate) {
    log.info("Recovering from exception for location {}", location);
    // Default ("cached") response returned once all retry attempts are exhausted
    WeatherRecords.WeatherData weatherData = new WeatherRecords.WeatherData(
            new WeatherRecords.Main(0, 0, 0, 0, 0),
            List.of(new WeatherRecords.Weather("Cached Data", "", "'")),
            new WeatherRecords.Clouds(0),
            new WeatherRecords.Wind(0, 0, 0),
            "No Data");
    return weatherData;
}
```

If you want to have different fallback methods for different exceptions, you can create multiple @Recover methods with different exception types as the first parameter.

Now let's test our endpoint with a failure scenario. We can simulate one by changing the weather API URL to an invalid one.

We will use httpie to test our endpoint.

```shell
http GET http://localhost:8080/trip-planner/Sydney/2025-04-17
```

The response contains the cached data from the fallback method.

If you check the logs, you will see three attempts (the initial call plus two retries) followed by a call to the fallback method.

```text
WeatherService : Getting weather data for location: Location[latitude=-33.8703155, longitude=151.2088801] and date: 2025-04-17
WeatherService : Getting weather data for location: Location[latitude=-33.8703155, longitude=151.2088801] and date: 2025-04-17
WeatherService : Getting weather data for location: Location[latitude=-33.8703155, longitude=151.2088801] and date: 2025-04-17
WeatherService : Recovering from exception for location Location[latitude=-33.8703155, longitude=151.2088801]
```

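We can add circuit breaker functionality with Resilience4j's @CircuitBreaker annotation. To keep the examples separate, we apply it to a new method, getWeatherWithCircuitBreaker, which contains the same logic as getWeather. Once the failure rate reaches the configured threshold, the circuit opens and calls go straight to the fallback method instead of the OpenWeatherMap API.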

```java
@CircuitBreaker(name = "weatherService", fallbackMethod = "recoverForCircuitBreaker")
public WeatherRecords.WeatherData getWeatherWithCircuitBreaker(PlaceRecords.Location location, String travelDate) {
    log.info("Getting weather data for location: {} and date: {} in getWeatherWithCircuitBreaker", location, travelDate);
    String uriString = UriComponentsBuilder
            .fromUriString("/forecast?lat={lat}&lon={long}&appid={apiKey}&units=metric")
            .buildAndExpand(location.latitude(), location.longitude(), apiKey)
            .toUriString();
    var weatherResponse = weatherClient.get()
            .uri(uriString)
            .retrieve()
            .body(WeatherRecords.WeatherResponse.class);
    return weatherResponse.list().stream()
            .filter(weatherData -> weatherData.dtTxt().startsWith(travelDate))
            .findFirst()
            .orElseThrow(() -> new NoDataFoundException("No weather data found for the date", 100));
}

// Fallback: same parameters as the protected method plus a trailing Throwable
public WeatherRecords.WeatherData recoverForCircuitBreaker(PlaceRecords.Location location, String travelDate, Throwable t) {
    log.info("Recovering from exception for location : {} in circuit breaker", location);
    WeatherRecords.WeatherData weatherData = new WeatherRecords.WeatherData(
            new WeatherRecords.Main(0, 0, 0, 0, 0),
            List.of(new WeatherRecords.Weather("Cached Data", "", "'")),
            new WeatherRecords.Clouds(0),
            new WeatherRecords.Wind(0, 0, 0),
            "No Data");
    return weatherData;
}
```

Now, let's add the Resilience4j circuit breaker configuration in our application.properties:

```properties
# Circuit Breaker Configuration
resilience4j.circuitbreaker.instances.weatherService.failure-rate-threshold=10
resilience4j.circuitbreaker.instances.weatherService.minimum-number-of-calls=5
resilience4j.circuitbreaker.instances.weatherService.sliding-window-size=5
resilience4j.circuitbreaker.instances.weatherService.permitted-number-of-calls-in-half-open-state=1
```

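This configuration:

- `failure-rate-threshold=10` opens the circuit when 10% or more of the recorded calls fail.
- `minimum-number-of-calls=5` means the failure rate is only evaluated after at least five calls have been recorded.
- `sliding-window-size=5` bases the failure rate on the last five calls.
- `permitted-number-of-calls-in-half-open-state=1` lets a single trial call through when the circuit moves to half-open. The wait before that happens is not set here, so Resilience4j's default of 60 seconds applies.

The `fallbackMethod` attribute of `@CircuitBreaker` specifies the method to call when the circuit breaker is open or when the method fails.

Now let's test it by calling the API five times using a script: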

```bash
for i in {1..5}; do
  curl "http://localhost:8080/trip-planner/Sydney/2025-04-17"
  echo "Request $i completed"
  sleep 1
done
```

Each response contains the cached data from the fallback method.

If you check the logs, you will see that once enough failed calls have been recorded, the circuit breaker opens and only the fallback method is called.

```text
2025-04-26T22:49:00.078+10:00 WeatherService : Getting weather data for location: Location[latitude=-33.874880000000005, longitude=151.2009] and date: 2025-04-17 in getWeatherWithCircuitBreaker
2025-04-26T22:49:00.078+10:00 WeatherService : Getting weather data for location: Location[latitude=-33.857439, longitude=151.2077747] and date: 2025-04-17 in getWeatherWithCircuitBreaker
2025-04-26T22:49:00.086+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.874880000000005, longitude=151.2009] in circuit breaker
2025-04-26T22:49:00.086+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.857439, longitude=151.2077747] in circuit breaker
2025-04-26T22:49:02.002+10:00 WeatherService : Getting weather data for location: Location[latitude=-33.874880000000005, longitude=151.2009] and date: 2025-04-17 in getWeatherWithCircuitBreaker
2025-04-26T22:49:02.002+10:00 WeatherService : Getting weather data for location: Location[latitude=-33.857439, longitude=151.2077747] and date: 2025-04-17 in getWeatherWithCircuitBreaker
2025-04-26T22:49:02.004+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.857439, longitude=151.2077747] in circuit breaker
2025-04-26T22:49:02.004+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.874880000000005, longitude=151.2009] in circuit breaker
2025-04-26T22:49:03.401+10:00 WeatherService : Getting weather data for location: Location[latitude=-33.874880000000005, longitude=151.2009] and date: 2025-04-17 in getWeatherWithCircuitBreaker
2025-04-26T22:49:03.401+10:00 WeatherService : Getting weather data for location: Location[latitude=-33.857439, longitude=151.2077747] and date: 2025-04-17 in getWeatherWithCircuitBreaker
2025-04-26T22:49:03.403+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.874880000000005, longitude=151.2009] in circuit breaker
2025-04-26T22:49:03.407+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.857439, longitude=151.2077747] in circuit breaker
2025-04-26T22:49:05.013+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.857439, longitude=151.2077747] in circuit breaker
2025-04-26T22:49:05.013+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.874880000000005, longitude=151.2009] in circuit breaker
2025-04-26T22:49:06.442+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.857439, longitude=151.2077747] in circuit breaker
2025-04-26T22:49:06.443+10:00 WeatherService : Recovering from exception for location : Location[latitude=-33.874880000000005, longitude=151.2009] in circuit breaker
```

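Here you can see six failed calls to the weather API: two per request (one for each location) across the first three requests. The two calls in the third request ran concurrently, so both were admitted before the fifth failure was recorded and tripped the circuit. From the fourth request onwards the circuit is open: the fallback method is called immediately and returns a cached response, without making further calls to the OpenWeatherMap API.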

Now suppose you are running an expensive operation and, if it doesn't return within a certain time, you want to stop waiting for it and return a default value. Resilience4j provides the @TimeLimiter annotation for this.

Say that in our TripPlannerService class we want to pass the data from Google Maps and the OpenWeatherMap API to an LLM to get a summary. If the LLM doesn't respond within 1 second, we want to return a default value instead. Note that the annotated method returns a CompletableFuture.

```java
@TimeLimiter(name = "recommendationSummary", fallbackMethod = "getDefaultRecommendation")
public CompletableFuture<String> getOverallRecommendationFromLLM(List<PlaceRecommendation> recommendationList) {
    return CompletableFuture.supplyAsync(() -> {
        try {
            // Simulate a long-running operation (e.g. an LLM call)
            Thread.sleep(Duration.ofSeconds(5));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        log.info("Getting overall recommendation");
        return "Overall recommendation";
    });
}

// Fallback: same parameters as the protected method plus a trailing Throwable
public CompletableFuture<String> getDefaultRecommendation(List<PlaceRecommendation> recommendationList, Throwable t) {
    log.error("Error in getting overall recommendation: {}", t.getMessage());
    return CompletableFuture.completedFuture("Default recommendation");
}
```

Then set the timeout in application.properties:

```properties
# Timeout Configuration
resilience4j.timelimiter.instances.recommendationSummary.timeout-duration=1000ms
```

Because the work runs inside CompletableFuture.supplyAsync, the time limiter stops waiting after one second, but the sleeping task itself is not interrupted and keeps running in the background.

The bulkhead pattern limits the number of concurrent calls to a service, preventing resource exhaustion. Let's implement it in our WeatherService class:

```java
@Bulkhead(name = "weatherService", fallbackMethod = "getWeatherWithBulkheadFallback")
public WeatherRecords.WeatherData getWeatherWithBulkhead(PlaceRecords.Location location, String travelDate) {
    log.info("Getting weather data for location: {} and date: {}", location, travelDate);
    String uriString = UriComponentsBuilder
            .fromUriString("/forecast?lat={lat}&lon={long}&appid={apiKey}&units=metric")
            .buildAndExpand(location.latitude(), location.longitude(), apiKey)
            .toUriString();
    var weatherResponse = weatherClient.get()
            .uri(uriString)
            .retrieve()
            .body(WeatherRecords.WeatherResponse.class);
    return weatherResponse.list().stream()
            .filter(weatherData -> weatherData.dtTxt().startsWith(travelDate))
            .findFirst()
            .orElseThrow(() -> new NoDataFoundException("No weather data found for the date", 100));
}

// Called when the bulkhead is full (BulkheadFullException) or when the call fails
public WeatherRecords.WeatherData getWeatherWithBulkheadFallback(PlaceRecords.Location location, String travelDate, Throwable t) {
    // Return a default value or cached data
    return new WeatherRecords.WeatherData(
            new WeatherRecords.Main(0, 0, 0, 0, 0),
            List.of(new WeatherRecords.Weather("Cached Data", "", "'")),
            new WeatherRecords.Clouds(0),
            new WeatherRecords.Wind(0, 0, 0),
            "No Data");
}
```

Now, let's add the Resilience4j bulkhead configuration in our application.properties:

```properties
# Bulkhead Configuration
resilience4j.bulkhead.metrics.enabled=true
resilience4j.bulkhead.instances.weatherService.max-concurrent-calls=3
resilience4j.bulkhead.instances.weatherService.max-wait-duration=1
```

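This configuration:

- `max-concurrent-calls=3` allows at most three concurrent calls to `getWeatherWithBulkhead`.
- `max-wait-duration=1` sets how long a call waits for a free slot when the bulkhead is full before it is rejected (a bare number is read as milliseconds).
- `metrics.enabled` enables metrics for the bulkhead, allowing you to monitor its performance.

The `fallbackMethod` attribute specifies the method to call when the bulkhead is full or when the method fails.

Rate limiting is a technique to control the rate at which requests are processed. This can help prevent overloading a service and ensure fair usage among clients. We can use the @RateLimiter annotation to implement rate limiting in our WeatherService class: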

```java
@RateLimiter(name = "weatherService", fallbackMethod = "getWeatherWithRateLimiterFallback")
public WeatherRecords.WeatherData getWeatherWithRateLimiter(PlaceRecords.Location location, String travelDate) {
    // Existing implementation
}

public WeatherRecords.WeatherData getWeatherWithRateLimiterFallback(PlaceRecords.Location location, String travelDate, Throwable t) {
    // Return a default value or cached data
}
```

Now, let's add the Resilience4j rate limiter configuration in our application.properties:

```properties
# Rate Limiter Configuration
resilience4j.ratelimiter.metrics.enabled=true
resilience4j.ratelimiter.instances.weatherService.limit-for-period=5
resilience4j.ratelimiter.instances.weatherService.limit-refresh-period=60s
resilience4j.ratelimiter.instances.weatherService.timeout-duration=5s
```

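This configuration:

- `limit-for-period=5` allows at most five calls per refresh period.
- `limit-refresh-period=60s` resets the available permissions every 60 seconds.
- `timeout-duration=5s` makes a call wait up to five seconds for a permission before it is rejected.
- `metrics.enabled` enables metrics for the rate limiter, allowing you to monitor its performance.

The `fallbackMethod` attribute specifies the method to call when the rate limit is exceeded or when the method fails.

Spring Boot Actuator provides a way to monitor the health and metrics of your application. Let's expose its endpoints so we can monitor our resilience patterns: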

Add this to application.properties:

```properties
# Actuator Configuration
management.endpoints.web.exposure.include=*
```

Now you can access these endpoints to monitor your application:

- `/actuator/circuitbreakers` - Detailed information about circuit breakers
- `/actuator/metrics/resilience4j.circuitbreaker.calls` - Metrics on circuit breaker calls
- `/actuator/metrics/resilience4j.ratelimiter.calls` - Metrics on rate limiter calls
- `/actuator/metrics/resilience4j.bulkhead.calls` - Metrics on bulkhead calls
- `/actuator/metrics/resilience4j.timelimiter.calls` - Metrics on time limiter calls
- `/actuator/metrics/resilience4j.retry.calls` - Metrics on retry calls (this covers Resilience4j's own Retry module; the Spring Retry `@Retryable` used earlier does not report under this name)

In this article, we've explored various resilience patterns and implemented them in our Trip Planner API using Spring Retry and Resilience4j.

Remember that resilience is not just about implementing patterns but also about monitoring and continuous improvement. Regularly review your failure scenarios, test your resilience mechanisms, and adjust your strategies based on real-world performance.

You can find the source code here

To stay up to date with the latest in Java and Spring, follow us on LinkedIn and Medium.
