Proxies, reverse proxies, and load balancers are foundational components in modern web and cloud architectures. While their names are often used interchangeably, each serves a distinct purpose in routing, security, and scalability. Understanding these differences is crucial for architects, developers, and DevOps engineers designing scalable systems.

Consider this scenario: An e-commerce company needs to handle millions of requests during Black Friday sales. They might use a load balancer to distribute traffic across multiple servers, a reverse proxy for SSL termination and caching, and corporate proxies to manage employee internet access. Each component serves a specific role in creating a robust, scalable architecture.

Let us explore each technology with real-world examples and common use cases.

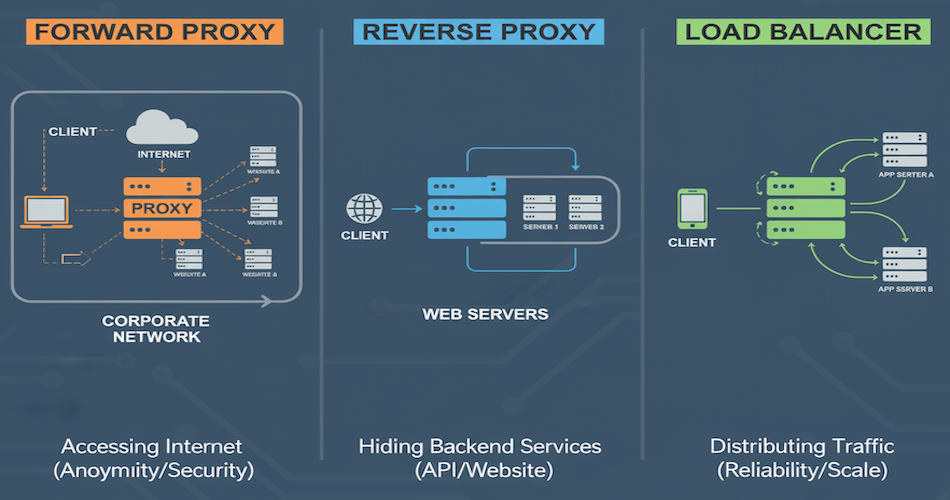

A proxy, also called a forward proxy, acts as an intermediary between a client (like your browser) and the internet. It forwards client requests to servers, often providing anonymity, security, or access control. A typical use is giving machines on a corporate network controlled access to the internet.

Key points:

In corporate environments, forward proxies act as gatekeepers of network security, filtering out malicious websites and monitoring employee internet usage. Many companies use them to block social media during work hours or prevent access to inappropriate content.

For families, proxies provide parental controls by blocking inappropriate websites and setting time-based restrictions for children's internet access. On the privacy front, proxies hide your IP address from destination servers.

ISPs also leverage proxies for caching frequently requested content, which reduces bandwidth usage and improves response times for their customers.

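```
# Squid proxy configuration example
http_port 3128

# Clients on the local network
acl localnet src 192.168.1.0/24

# Block social media during work hours (Mon-Fri, 09:00-17:00)
acl workhours time MTWHF 09:00-17:00
acl socialmedia dstdomain .facebook.com .twitter.com .instagram.com

# http_access rules are checked top to bottom and the first match wins,
# so the social media deny rule must come before the general allow
http_access deny socialmedia workhours
http_access allow localnet
http_access deny all
```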
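To make the first-match behavior of access rules easier to see, here is a minimal Python sketch that models the same policy. It is only an illustration, not how Squid is implemented: the function names (`in_work_hours`, `is_social_media`, `is_allowed`) are hypothetical, and it assumes the social media deny rule is evaluated before the general allow for the local network.

```python
from datetime import datetime
from ipaddress import ip_address, ip_network

# Values mirror the example policy: one local subnet, three blocked domains.
LOCALNET = ip_network("192.168.1.0/24")
SOCIAL_MEDIA = ("facebook.com", "twitter.com", "instagram.com")


def in_work_hours(when: datetime) -> bool:
    """Monday to Friday, from 09:00 up to (not including) 17:00."""
    return when.weekday() < 5 and 9 <= when.hour < 17


def is_social_media(host: str) -> bool:
    """True for a listed domain or any of its subdomains."""
    host = host.lower()
    return any(host == d or host.endswith("." + d) for d in SOCIAL_MEDIA)


def is_allowed(client_ip: str, host: str, when: datetime) -> bool:
    """Check the rules top to bottom; the first matching rule decides."""
    if is_social_media(host) and in_work_hours(when):
        return False  # deny social media during work hours
    if ip_address(client_ip) in LOCALNET:
        return True   # allow the local network
    return False      # deny everything else


if __name__ == "__main__":
    monday_morning = datetime(2024, 1, 8, 10, 0)
    print(is_allowed("192.168.1.5", "www.facebook.com", monday_morning))
    print(is_allowed("192.168.1.5", "example.com", monday_morning))
```

Swapping the first two checks would let every local client through before the social media rule is ever consulted, which is why rule order matters in this kind of policy.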

A reverse proxy sits in front of one or more servers and handles requests from clients on their behalf. Clients are unaware of the backend servers; they only interact with the reverse proxy. This is usually used to hide backend servers from clients that access services, such as a public API, over the internet.

Key points:

Reverse proxies are particularly valuable for SSL termination when you have multiple services behind the proxy: instead of managing SSL certificates for each service, you handle everything at the proxy level.

They also excel at load distribution, spreading incoming requests across multiple backend servers. While they're not as sophisticated as dedicated load balancers, they provide basic health checking and failover capabilities that work well for many applications.

Performance optimization is another key strength. Reverse proxies cache static content closer to clients and compress responses to reduce bandwidth usage. Tools like Varnish cache and Nginx's gzip compression are perfect examples of this capability.
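The caching and compression idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how Varnish or Nginx work internally: the `CachingProxy` class, its `fetch` callback, and the 60-second default TTL are all hypothetical names and values chosen for the example.

```python
import gzip
import time
from typing import Callable, Dict, Tuple


class CachingProxy:
    """Caches gzip-compressed backend responses for a fixed time-to-live."""

    def __init__(
        self,
        fetch: Callable[[str], bytes],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch          # function that asks the backend for a path
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._cache: Dict[str, Tuple[float, bytes]] = {}

    def get(self, path: str) -> bytes:
        """Return the compressed body, calling the backend only on a miss."""
        now = self._clock()
        entry = self._cache.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]                      # cache hit, still fresh
        body = gzip.compress(self._fetch(path))  # miss: fetch and compress
        self._cache[path] = (now + self._ttl, body)
        return body
```

A real reverse proxy would also honor `Cache-Control` headers and only compress when the client sends a matching `Accept-Encoding` header; both are left out here to keep the sketch short.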

From a security perspective, reverse proxies act as a protective barrier, implementing rate limiting, DDoS protection, and authentication before requests even reach your backend services. They also hide your internal network topology, which is a significant security benefit.
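Rate limiting is often explained with a token bucket, so here is a minimal Python sketch of one. Treat it as an illustration only: it is not the algorithm any particular proxy uses, and the `TokenBucket` and `PerClientLimiter` names, as well as the rate of 10 requests per second with a burst of 20, are assumptions made for the example.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = float(burst)
        self.tokens = float(burst)   # start with a full bucket
        self._clock = clock
        self._last = clock()

    def allow(self) -> bool:
        """Refill based on elapsed time, then spend one token if available."""
        now = self._clock()
        elapsed = now - self._last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self._last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """Keeps one bucket per client IP, created on first use."""

    def __init__(self, rate: float = 10, burst: int = 20,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate, self._burst, self._clock = rate, burst, clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_ip: str) -> bool:
        bucket = self._buckets.get(client_ip)
        if bucket is None:
            bucket = TokenBucket(self._rate, self._burst, self._clock)
            self._buckets[client_ip] = bucket
        return bucket.allow()
```

Because the limiter runs at the proxy, a client that exceeds its budget is rejected before the request ever reaches a backend service.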

Many reverse proxies also handle content optimization automatically, resizing images, minifying CSS and JavaScript files, and delivering browser-specific content to improve user experience.

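```
# Nginx reverse proxy configuration (these blocks go inside the http context)

# Rate limiting zone: limit_req_zone is only valid at the http level
limit_req_zone $binary_remote_addr zone=api:10m rate=10r/s;

upstream backend_servers {
    server 192.168.1.10:8080;
    server 192.168.1.11:8080;
    server 192.168.1.12:8080;
}

server {
    listen 80;
    server_name api.example.com;

    # SSL termination is not configured in this example; it would need a
    # "listen 443 ssl;" listener plus ssl_certificate and ssl_certificate_key

    # Rate limiting: 10 requests/second per client IP, bursts of up to 20
    limit_req zone=api burst=20 nodelay;

    location / {
        proxy_pass http://backend_servers;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }

    # Long-lived browser caching for static content
    location ~* \.(jpg|jpeg|png|gif|css|js)$ {
        proxy_pass http://backend_servers;
        expires 1y;
        add_header Cache-Control "public, immutable";
    }
}
```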
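The forwarding headers in the configuration above exist because the backend otherwise only sees the proxy's address. The Python sketch below shows roughly what a reverse proxy does when it builds them. The `forwarded_headers` function is a hypothetical helper written for this article, and it assumes header names have already been normalized to the capitalization shown.

```python
from typing import Dict


def forwarded_headers(client_headers: Dict[str, str],
                      remote_addr: str,
                      scheme: str) -> Dict[str, str]:
    """Build the headers a reverse proxy adds before contacting a backend."""
    prior = client_headers.get("X-Forwarded-For")
    # Append the connecting address to any existing chain, so each proxy
    # along the way adds one hop; with no prior header, start the chain.
    chain = f"{prior}, {remote_addr}" if prior else remote_addr
    return {
        "Host": client_headers.get("Host", ""),
        "X-Real-IP": remote_addr,
        "X-Forwarded-For": chain,
        "X-Forwarded-Proto": scheme,
    }


if __name__ == "__main__":
    incoming = {"Host": "api.example.com", "X-Forwarded-For": "198.51.100.4"}
    print(forwarded_headers(incoming, "203.0.113.7", "https"))
```

Backends should only trust these headers when the request really came from their own proxy, since a client can send a forged `X-Forwarded-For` value.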

A load balancer distributes incoming client requests across multiple backend servers to ensure no single server is overwhelmed, improving reliability and scalability.
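The simplest distribution strategy is round robin, where each new request goes to the next server in the list. Here is a minimal Python sketch of that idea; the `RoundRobinBalancer` class and the backend addresses are illustrative assumptions, and real load balancers add weighting, health awareness, and connection tracking on top.

```python
from itertools import cycle
from typing import Iterable


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends: Iterable[str]) -> None:
        pool = list(backends)
        if not pool:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(pool)  # endless iterator over the pool

    def next_backend(self) -> str:
        """Return the backend that should receive the next request."""
        return next(self._rotation)


if __name__ == "__main__":
    lb = RoundRobinBalancer(["192.168.1.10:8080", "192.168.1.11:8080"])
    for _ in range(4):
        print(lb.next_backend())
```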

Key points:

Load balancers continuously monitor backend servers:

```
# HAProxy health check configuration
backend web_servers
    balance roundrobin
    option httpchk GET /health
    server web1 192.168.1.10:8080 check inter 5s rise 2 fall 3
    server web2 192.168.1.11:8080 check inter 5s rise 2 fall 3
    server web3 192.168.1.12:8080 check inter 5s rise 2 fall 3
```
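The `rise 2 fall 3` settings mean a server is marked up after 2 consecutive successful checks and marked down after 3 consecutive failed ones. The Python sketch below models that state machine in a simplified form. It is not HAProxy's implementation, and the `HealthTracker` class is a hypothetical name used only for this illustration.

```python
class HealthTracker:
    """Tracks one server's up/down state from a stream of check results."""

    def __init__(self, rise: int = 2, fall: int = 3,
                 initially_up: bool = True) -> None:
        self.rise = rise
        self.fall = fall
        self.up = initially_up
        self._streak = 0  # consecutive results that contradict the current state

    def record(self, check_passed: bool) -> bool:
        """Record one health check result and return the resulting state."""
        if check_passed == self.up:
            self._streak = 0  # result agrees with current state, reset the count
        else:
            self._streak += 1
            threshold = self.fall if self.up else self.rise
            if self._streak >= threshold:
                self.up = not self.up  # enough contrary results, flip the state
                self._streak = 0
        return self.up
```

Requiring several consecutive results in a row keeps a single slow or dropped check from removing a healthy server from rotation.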

| Type | How It Works | Typical Use/Examples |
|---|---|---|
| Layer 4 Load Balancer | Operates at TCP/UDP level. Extremely fast; does not inspect application data. | AWS Network Load Balancer, HAProxy (TCP mode) |
| Layer 7 Load Balancer | Works at HTTP/HTTPS (application) layer. Can route by URL path, headers, or content. Enables intelligent routing. | AWS Application Load Balancer, Nginx, HAProxy (HTTP mode) |
| Global Server Load Balancing (GSLB) | Distributes traffic across multiple data centers. Provides geographic load balancing and disaster recovery (often DNS-based). | AWS Route 53, Cloudflare |

Hardware Load Balancers:

Software Load Balancers:

Cloud Load Balancers:


| Feature | Proxy | Reverse Proxy | Load Balancer |
|---|---|---|---|
| Main Use | Anonymity, Filtering, Access Control | Security, SSL, Caching | Scalability, Reliability |
| Request Routing | To Internet | To Internal Servers | To Multiple Servers |
| Layer | Application | Application | Transport/Application |
| Session Affinity | Not Required | Configurable | Configurable |
| Health Checks | Not Common | Basic | Advanced |
| SSL Termination | No | Yes | Yes |
| Caching | Yes | Yes | Limited |
| Rate Limiting | Yes | Yes | Yes |

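Understanding the differences between proxies, reverse proxies, and load balancers is crucial for designing secure, scalable, and robust web architectures. Each technology serves a specific purpose: forward proxies provide anonymity, filtering, and access control; reverse proxies provide security, SSL termination, and caching; and load balancers provide scalability and reliability.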

Many reverse proxies, such as Nginx and HAProxy, can also perform basic load balancing. However, dedicated load balancers offer more advanced features such as sophisticated health checks, session persistence, and complex routing algorithms.

Layer 4 load balancers work at the transport layer (TCP/UDP) and route traffic based on IP addresses and ports. Layer 7 load balancers work at the application layer (HTTP/HTTPS) and can make routing decisions based on URL paths, headers, and content.
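The difference shows up clearly in what each layer is able to look at when choosing a backend. The Python sketch below contrasts the two under simplifying assumptions: `route_layer4` and `route_layer7` are hypothetical helpers, the hash-based choice is just one possible Layer 4 strategy, and the path prefixes and pool names are made up for the example.

```python
import hashlib
from typing import Dict, List


def route_layer4(src_ip: str, src_port: int, backends: List[str]) -> str:
    """Pick a backend using only connection details (IP address and port)."""
    key = f"{src_ip}:{src_port}".encode()
    digest = hashlib.sha256(key).digest()
    # A stable hash keeps the same connection tuple on the same backend.
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


def route_layer7(path: str, routes: Dict[str, List[str]],
                 default: List[str]) -> List[str]:
    """Pick a backend pool by inspecting the HTTP request path."""
    # Try longer prefixes first so the most specific route wins.
    for prefix in sorted(routes, key=len, reverse=True):
        if path.startswith(prefix):
            return routes[prefix]
    return default


if __name__ == "__main__":
    servers = ["192.168.1.10:8080", "192.168.1.11:8080", "192.168.1.12:8080"]
    print(route_layer4("203.0.113.7", 51000, servers))

    routes = {"/api/": ["api-1", "api-2"], "/static/": ["static-1"]}
    print(route_layer7("/api/users", routes, default=["web-1"]))
```

The Layer 4 function never sees the URL, which is why it is fast but cannot send `/api/` and `/static/` requests to different pools.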

Whether you need both a reverse proxy and a load balancer depends on your requirements. For simple applications, a reverse proxy with basic load balancing capabilities might be sufficient. For high-traffic applications, you might use both: a reverse proxy for SSL termination and caching, and a dedicated load balancer for advanced traffic distribution.

Forward proxies can add latency due to the extra hop, but they often improve performance through caching. Reverse proxies typically improve performance by caching static content and compressing responses. Load balancers can improve performance by distributing traffic and preventing server overload.

Each technology also has its own security considerations. Forward proxies can be bypassed and may log sensitive data. Reverse proxies can become single points of failure. Load balancers can be targeted by DDoS attacks. Proper configuration, regular updates, and monitoring are essential for security.

For more in-depth tutorials on Java, Spring, and modern software development, check out my content:

🔗 Blog: https://codewiz.info

🔗 LinkedIn: https://www.linkedin.com/in/code-wiz-740370302/

🔗 Medium: https://medium.com/@code.wizzard01

🔗 Github: https://github.com/CodeWizzard01
