Processes and Threads are fundamental concepts in computer science that enable modern applications to run efficiently and concurrently. They allow software to perform multiple tasks simultaneously, improving performance and responsiveness.

In simple terms, a process is like an independent program running on your computer (think of your web browser or a game). A thread, on the other hand, is like a mini-worker within that program, helping it do several things simultaneously (like loading images while you can still scroll a webpage).

Understanding processes and threads is super important if you're curious about how software really works, or if you're learning to program. They are the building blocks that operating systems use to manage tasks and allow applications to perform multiple operations concurrently, making your software fast and responsive.

A process is an instance of a computer program that is being executed. When you run a program you coded or installed on your computer, the operating system creates a process for it. Each process has its own memory space, which means it operates independently of other processes. This isolation helps prevent one process from interfering with another, enhancing stability and security.
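To make the idea of "a process the OS knows about" concrete, here is a minimal sketch that asks the JVM about its own process using the standard `ProcessHandle` API (Java 9+). The class name `ProcessInfoDemo` and the helper `currentPid()` are illustrative names, not part of the original guide, and which fields are available depends on the operating system.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Optional;

// Illustrative sketch: inspect the current JVM process via ProcessHandle (Java 9+).
class ProcessInfoDemo {

    // Returns the PID the operating system assigned to this JVM process.
    static long currentPid() {
        return ProcessHandle.current().pid();
    }

    public static void main(String[] args) {
        ProcessHandle self = ProcessHandle.current();
        ProcessHandle.Info info = self.info();

        System.out.println("PID: " + self.pid());
        // Every field is an Optional because the OS may not expose it.
        System.out.println("Command: " + info.command().orElse("(unavailable)"));
        Optional<Instant> start = info.startInstant();
        System.out.println("Started: " + start.map(Instant::toString).orElse("(unavailable)"));
        Optional<Duration> cpu = info.totalCpuDuration();
        System.out.println("CPU time: " + cpu.map(Duration::toString).orElse("(unavailable)"));
        // Processes form a tree: most have a parent that launched them.
        System.out.println("Parent PID: "
                + self.parent().map(p -> String.valueOf(p.pid())).orElse("(none)"));
    }
}
```

Running it twice should print two different PIDs, since each run is a separate process with its own memory space.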

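The Operating System (OS) manages processes using a data structure called the Process Control Block (PCB), also known as a Task Control Block. The PCB is vital for context switching and stores all the essential information about a process. Key information stored in a PCB typically includes the process ID, the current process state, the program counter, saved CPU register values, scheduling information, memory-management information, and I/O status.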

Process States

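As a process executes, it changes state. The state of a process is defined in part by the current activity of that process. Common process states include:

- New: the process is being created.
- Ready: the process is waiting to be assigned to a CPU.
- Running: the process's instructions are being executed.
- Waiting: the process is waiting for an event, such as an I/O operation, to complete.
- Terminated: the process has finished execution.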

These states transition in a lifecycle: A new process moves to Ready. When the OS scheduler dispatches it, it moves to Running. From Running, it might be moved back to Ready (e.g., if its time slice expires or a higher priority process comes in), or to Waiting (if it needs an I/O operation). A waiting process moves to Ready once the event it was waiting for occurs. Eventually, a running process will complete and move to Terminated.
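The lifecycle described above can be sketched as a small state machine. This is a hypothetical teaching model, not an operating system API: the enum `State` and the method `canMoveTo` are names made up for this example, and only the transitions named in the paragraph above are allowed.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model of the five-state process lifecycle; not an OS API.
class ProcessLifecycleDemo {

    enum State {
        NEW, READY, RUNNING, WAITING, TERMINATED;

        // States that may directly follow this one, per the lifecycle described above.
        Set<State> next() {
            switch (this) {
                case NEW:     return EnumSet.of(READY);
                case READY:   return EnumSet.of(RUNNING);
                case RUNNING: return EnumSet.of(READY, WAITING, TERMINATED);
                case WAITING: return EnumSet.of(READY);
                default:      return EnumSet.noneOf(State.class); // TERMINATED is final
            }
        }

        boolean canMoveTo(State target) {
            return next().contains(target);
        }
    }

    public static void main(String[] args) {
        for (State s : State.values()) {
            System.out.println(s + " -> " + s.next());
        }
    }
}
```

Note that a waiting process cannot jump straight back to Running: it must become Ready first and wait for the scheduler to dispatch it.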

Process Memory Layout

A process has its own virtual memory space, which is isolated from other processes. This memory space is typically organized into several segments:

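- Text (code) segment: the compiled program instructions.
- Data segment: global and static variables.
- Stack: function call frames, including local variables and return addresses.
- Heap: memory allocated dynamically at runtime (e.g., via malloc, new). The heap grows and shrinks as memory is allocated and deallocated by the program.

A process has its own memory space, which means that it has its own copy of the program code, data, and other resources. This memory space is isolated from other processes, so one process cannot directly access the memory of another process.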

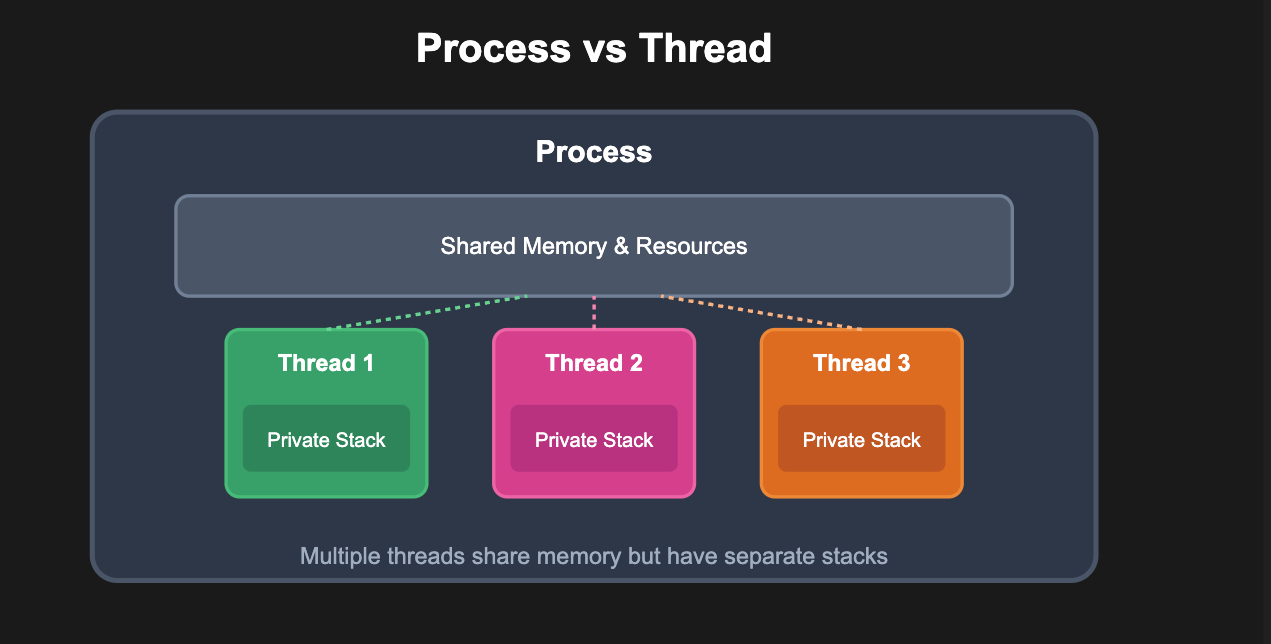

A thread is the smallest unit of execution within a process, often referred to as a lightweight process. It represents a single path of execution within its parent process. A process can have multiple threads, all executing concurrently and cooperating to perform the overall task of the process.

A process needs at least one thread to execute, but it can have many threads running simultaneously.

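Threads within the same process share many of the process's resources, including the program code, global and static data, heap memory, and open files.

However, each thread has its own distinct set of resources to allow independent execution: a thread ID, a program counter, a set of CPU registers, and its own stack.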

Benefits of Using Multiple Threads:

Using threads offers several significant advantages. Threads are lightweight compared to processes because they share the parent process's memory space instead of needing one of their own. This makes them faster to create and manage.
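The split between shared and per-thread resources can be seen in a few lines of Java. In this sketch (the class and method names are made up for illustration), both threads write into one map that lives on the shared heap, while each thread's `localSum` variable lives on that thread's own stack and is invisible to the other thread.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Illustrative sketch: one shared heap object, one private stack variable per thread.
class SharedVsPrivateDemo {

    static Map<String, Integer> runWorkers() {
        // Heap object: visible to every thread that holds a reference to it.
        Map<String, Integer> results = new ConcurrentHashMap<>();

        Runnable task = () -> {
            int localSum = 0; // local variable: lives on this thread's own stack
            for (int i = 1; i <= 100; i++) {
                localSum += i;
            }
            results.put(Thread.currentThread().getName(), localSum);
        };

        Thread a = new Thread(task, "worker-a");
        Thread b = new Thread(task, "worker-b");
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return results;
    }

    public static void main(String[] args) {
        System.out.println(runWorkers());
    }
}
```

A `ConcurrentHashMap` is used here because the map is shared; the local counter needs no protection at all because no other thread can reach it.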

| Feature | Process | Thread |
|---|---|---|
| Memory Space | Each process has its own isolated memory space | Threads share the memory space of the parent process |
| Isolation | Processes are completely isolated from each other | Threads within a process are not isolated from each other |
| Creation Time | Longer to create (requires OS resource allocation) | Shorter to create (lightweight, shares existing resources) |
| Context Switching | Higher overhead (must save/restore entire process state) | Lower overhead (only registers and stack pointer) |
| Communication | Slower - requires IPC mechanisms (pipes, sockets, shared memory) | Faster - direct access to shared memory and variables |
| Resource Consumption | Higher memory and CPU overhead | Lower memory and CPU overhead |
| Fault Tolerance | If one process crashes, others remain unaffected | If one thread crashes, entire process may terminate |
| Concurrency Model | Achieves concurrency through multiple independent processes | Achieves concurrency through multiple threads in same process |
| Scalability | Limited by system resources and IPC overhead | Better scalability due to shared resources |
| Security | Better security due to memory isolation | Shared memory can lead to security vulnerabilities |
| Debugging | Easier to debug due to isolation | More complex debugging due to shared state |

Multithreading is the ability of an operating system (or an application) to manage multiple threads of execution within a single process. These threads can run concurrently, meaning they appear to run at the same time. On a single-core processor, this concurrency is achieved through time-slicing (rapidly switching between threads). On a multi-core processor, threads can run truly in parallel, with different threads executing on different cores simultaneously.
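As a rough illustration of running work in parallel, the sketch below splits a sum over 1..n into chunks and hands each chunk to a thread in a fixed-size pool. The class `ParallelSumDemo` and its `parallelSum` method are made-up names for this example; whether the chunks actually run at the same time depends on how many cores the machine has.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustrative sketch: split one computation across several threads.
class ParallelSumDemo {

    // Sums 1..n using the given number of worker threads.
    static long parallelSum(int n, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            int chunk = (n + workers - 1) / workers; // ceiling division
            for (int w = 0; w < workers; w++) {
                final int from = w * chunk + 1;
                final int to = Math.min(n, (w + 1) * chunk);
                parts.add(pool.submit(() -> {
                    long sum = 0; // private to the thread running this chunk
                    for (int i = from; i <= to; i++) {
                        sum += i;
                    }
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> part : parts) {
                total += part.get(); // blocks until that chunk is done
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while summing", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("worker failed", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("Cores reported by the JVM: " + cores);
        System.out.println("Sum: " + parallelSum(1_000_000, cores));
    }
}
```

Each chunk keeps its running total in a local variable and only the final per-chunk results are combined, so no locking is needed.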

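Multithreading offers several key benefits: better responsiveness (one thread can keep handling user input while another does slow work), cheaper creation and context switching than separate processes, fast communication through shared memory, and true parallelism on multi-core hardware.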

While powerful, multithreading introduces complexities and potential pitfalls:

A race condition occurs when the behavior of a software system depends on the unpredictable sequence or timing of operations by multiple threads. If multiple threads access and manipulate shared data concurrently, and at least one of the accesses is a write, the outcome can be non-deterministic and lead to erroneous results. The "race" is to see which thread accesses/modifies the data last.

Example: Simple Counter (Java)

Consider a shared counter that multiple threads try to increment:

```java
class Counter {
    private int count = 0;

    // Unsynchronized method - potential race condition!
    public void increment() {
        int temp = count;   // Read
        temp = temp + 1;    // Modify
        count = temp;       // Write
    }

    public int getCount() {
        return count;
    }
}

class WorkerThread extends Thread {
    private Counter counter;

    public WorkerThread(Counter counter) {
        this.counter = counter;
    }

    @Override
    public void run() {
        for (int i = 0; i < 1000; i++) {
            counter.increment();
        }
    }
}

public class RaceConditionDemo {
    public static void main(String[] args) throws InterruptedException {
        Counter sharedCounter = new Counter();
        WorkerThread t1 = new WorkerThread(sharedCounter);
        WorkerThread t2 = new WorkerThread(sharedCounter);

        t1.start();
        t2.start();

        t1.join(); // Wait for t1 to finish
        t2.join(); // Wait for t2 to finish

        // Expected count: 2000, Actual count: often less due to race condition
        System.out.println("Final count: " + sharedCounter.getCount());
    }
}
```

In the increment() method, the read-modify-write sequence (int temp = count; temp = temp + 1; count = temp;) is not atomic. If Thread A reads count, then Thread B reads count (getting the same value) before Thread A writes its updated value, both threads might write the same incremented value, leading to a lost update.
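Two common ways to close this race are sketched below: making the method `synchronized`, so only one thread at a time can run the read-modify-write sequence, or using `java.util.concurrent.atomic.AtomicInteger`, whose `incrementAndGet()` performs the update atomically. The class names `SafeCounterDemo` and `SynchronizedCounter` and the helper `runOnTwoThreads` are illustrative, not part of the original example.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative sketch: two ways to make the counter increment thread-safe.
class SafeCounterDemo {

    // Option 1: guard the read-modify-write with the object's intrinsic lock.
    static class SynchronizedCounter {
        private int count = 0;

        public synchronized void increment() {
            count++;
        }

        public synchronized int getCount() {
            return count;
        }
    }

    // Runs the task 1000 times on each of two threads and waits for both.
    static void runOnTwoThreads(Runnable task) {
        Runnable loop = () -> {
            for (int i = 0; i < 1000; i++) {
                task.run();
            }
        };
        Thread t1 = new Thread(loop);
        Thread t2 = new Thread(loop);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
    }

    static int runWithSynchronized() {
        SynchronizedCounter counter = new SynchronizedCounter();
        runOnTwoThreads(counter::increment);
        return counter.getCount();
    }

    // Option 2: let AtomicInteger perform the increment as one atomic step.
    static int runWithAtomic() {
        AtomicInteger counter = new AtomicInteger();
        runOnTwoThreads(counter::incrementAndGet);
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("synchronized: " + runWithSynchronized()); // 2000
        System.out.println("atomic:       " + runWithAtomic());       // 2000
    }
}
```

For a single counter, `AtomicInteger` is usually the lighter choice; `synchronized` becomes more useful when several fields must change together.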

A deadlock is a state in which two or more threads are blocked forever, each waiting for the other to release a resource that it holds. This typically occurs when threads attempt to acquire multiple locks in different orders.
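One widely used way to avoid this kind of deadlock is to make every thread acquire locks in the same global order. The sketch below is a hypothetical example (the `Account` class, its `id` field, and `transfer` are invented for illustration): two threads transfer money in opposite directions, but because locks are always taken in ascending `id` order, neither thread can end up holding one lock while waiting for the other in a cycle.

```java
import java.util.concurrent.locks.ReentrantLock;

// Illustrative sketch: avoid deadlock by always locking in a fixed order.
class LockOrderingDemo {

    static class Account {
        final int id;
        long balance;
        final ReentrantLock lock = new ReentrantLock();

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    static void transfer(Account from, Account to, long amount) {
        // Lock the account with the smaller id first, whatever the transfer direction.
        Account first = from.id < to.id ? from : to;
        Account second = (first == from) ? to : from;

        first.lock.lock();
        try {
            second.lock.lock();
            try {
                from.balance -= amount;
                to.balance += amount;
            } finally {
                second.lock.unlock();
            }
        } finally {
            first.lock.unlock();
        }
    }

    // Runs opposing transfers concurrently and returns the combined balance.
    static long runDemo() {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < 1000; i++) transfer(a, b, 1);
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < 1000; i++) transfer(b, a, 1);
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return a.balance + b.balance;
    }

    public static void main(String[] args) {
        System.out.println("Total balance: " + runDemo()); // 2000
    }
}
```

If `transfer` instead locked `from` first and `to` second, the two threads could each grab one lock and wait forever for the other.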

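The Coffman conditions describe four necessary conditions for a deadlock to occur:

- Mutual exclusion: at least one resource can be held by only one thread at a time.
- Hold and wait: a thread holds at least one resource while waiting to acquire another.
- No preemption: a resource cannot be forcibly taken away from the thread holding it.
- Circular wait: a cycle of threads exists in which each waits for a resource held by the next.

All four must hold at once, so breaking any one of them prevents deadlock.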

Because threads share memory, it's crucial to control access to shared data to prevent issues like race conditions and data corruption. This control mechanism is called synchronization. Synchronization ensures that only one thread can access a critical section (a piece of code that accesses shared resources) at any given time, or that operations on shared data are performed in a coordinated manner. Synchronization has to be applied carefully, because acquiring locks in inconsistent orders is exactly what produces deadlocks.

Operating systems and programming languages provide various synchronization primitives to help manage concurrent access:

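- Mutexes (locks): allow only one thread at a time to hold the lock and enter the critical section.
- Semaphores: maintain a count of permits, allowing up to a fixed number of threads to access a resource at once.
- Monitors: combine mutual exclusion with the ability to wait for a condition. Java's synchronized keyword and wait()/notify() methods are based on the monitor concept.

These primitives are essential tools for writing correct and robust multithreaded applications.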
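To show the monitor idea in practice, here is a minimal sketch of a bounded buffer built with `synchronized`, `wait()`, and `notifyAll()`. The class names `MonitorDemo` and `BoundedBuffer` are invented for this example. A producer blocks when the buffer is full and a consumer blocks when it is empty.

```java
import java.util.ArrayDeque;
import java.util.Queue;

// Illustrative sketch: a tiny producer/consumer buffer using Java's monitor methods.
class MonitorDemo {

    static class BoundedBuffer {
        private final Queue<Integer> items = new ArrayDeque<>();
        private final int capacity;

        BoundedBuffer(int capacity) {
            this.capacity = capacity;
        }

        synchronized void put(int value) throws InterruptedException {
            while (items.size() == capacity) {
                wait(); // releases the lock until another thread calls notifyAll()
            }
            items.add(value);
            notifyAll(); // wake any consumer waiting for data
        }

        synchronized int take() throws InterruptedException {
            while (items.isEmpty()) {
                wait();
            }
            int value = items.remove();
            notifyAll(); // wake any producer waiting for space
            return value;
        }
    }

    // Produces 1..n on one thread, consumes on another, returns the consumed sum.
    static int produceAndConsume(int n) {
        BoundedBuffer buffer = new BoundedBuffer(2);
        int[] sum = {0}; // written only by the consumer, read after join()

        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) buffer.put(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) sum[0] += buffer.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return sum[0];
    }

    public static void main(String[] args) {
        System.out.println("Sum of consumed items: " + produceAndConsume(10)); // 55
    }
}
```

The `while` loops around `wait()` matter: a thread must re-check its condition after waking, because another thread may have changed the buffer in the meantime. In production code, `java.util.concurrent.ArrayBlockingQueue` provides the same behavior ready-made.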

Java provides built-in support for multithreading. Here are two common ways to create threads:

Extending the Thread Class

You can create a new class that extends java.lang.Thread and override its run() method.

```java
class MyThread extends Thread {
    private String threadName;

    public MyThread(String name) {
        this.threadName = name;
        System.out.println("Creating " + threadName);
    }

    @Override
    public void run() {
        System.out.println("Running " + threadName);
        try {
            for (int i = 4; i > 0; i--) {
                System.out.println("Thread: " + threadName + ", " + i);
                // Let the thread sleep for a while.
                Thread.sleep(50);
            }
        } catch (InterruptedException e) {
            System.out.println("Thread " + threadName + " interrupted.");
        }
        System.out.println("Thread " + threadName + " exiting.");
    }
}

public class ThreadExample {
    public static void main(String args[]) {
        MyThread thread1 = new MyThread("Thread-1");
        MyThread thread2 = new MyThread("Thread-2");

        thread1.start(); // Starts a new thread, which then calls run()
        thread2.start(); // Starts a new thread, which then calls run()
    }
}
```

Implementing the Runnable Interface

A more flexible approach is to implement the java.lang.Runnable interface. This is often preferred because Java does not support multiple inheritance of classes, so if your class already extends another class, it cannot extend Thread.

```java
class MyRunnable implements Runnable {
    private String threadName;

    public MyRunnable(String name) {
        this.threadName = name;
        System.out.println("Creating " + threadName);
    }

    @Override
    public void run() {
        System.out.println("Running " + threadName);
        try {
            for (int i = 4; i > 0; i--) {
                System.out.println("Thread: " + threadName + ", " + i);
                Thread.sleep(50);
            }
        } catch (InterruptedException e) {
            System.out.println("Thread " + threadName + " interrupted.");
        }
        System.out.println("Thread " + threadName + " exiting.");
    }
}

public class RunnableExample {
    public static void main(String args[]) {
        MyRunnable runnable1 = new MyRunnable("Thread-A");
        MyRunnable runnable2 = new MyRunnable("Thread-B");

        Thread thread1 = new Thread(runnable1);
        Thread thread2 = new Thread(runnable2);

        thread1.start();
        thread2.start();

        // Example of join(): wait for threads to finish
        try {
            thread1.join();
            thread2.join();
        } catch (InterruptedException e) {
            System.out.println("Main thread interrupted.");
        }
        System.out.println("Main thread exiting.");
    }
}
```

To start a thread created via Runnable, you create a Thread object, pass the Runnable instance to its constructor, and then call start() on the Thread object. The join() method can be used to make the current thread wait until the specified thread completes its execution.
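Because Runnable has a single abstract method, it can also be written as a lambda, which avoids a separate class for short tasks. The sketch below (with the made-up names `LambdaRunnableDemo` and `runInNamedThread`) also uses the `Thread(Runnable, String)` constructor to give the thread an explicit name, which makes it much easier to recognize in diagnostic tools.

```java
// Illustrative sketch: a Runnable written as a lambda, run on an explicitly named thread.
class LambdaRunnableDemo {

    // Starts a thread with the given name and returns the name the task observed.
    static String runInNamedThread(String name) {
        String[] seen = new String[1]; // written by the worker, read after join()

        Thread t = new Thread(() -> seen[0] = Thread.currentThread().getName(), name);
        t.start();
        try {
            t.join(); // wait for the worker so its write is visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println("Task ran on: " + runInNamedThread("Worker-1"));
    }
}
```

Without the second constructor argument, the JVM assigns default names such as Thread-0 and Thread-1.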

Now let's see how we can observe some of these concepts with a running Java application.

First, let's create a simple Java program named SimpleJavaProcess.java that creates a couple of worker threads:

```java
// SimpleJavaProcess.java
class SimpleWorker implements Runnable {
    private String name;
    private int iterations;
    private long sleepMillis;

    public SimpleWorker(String name, int iterations, long sleepMillis) {
        this.name = name;
        this.iterations = iterations;
        this.sleepMillis = sleepMillis;
    }

    @Override
    public void run() {
        System.out.println("Thread: " + name + " starting.");
        try {
            for (int i = 0; i < iterations; i++) {
                System.out.println("Thread: " + name + " - iteration " + (i + 1) + "/" + iterations);
                Thread.sleep(sleepMillis);
            }
        } catch (InterruptedException e) {
            System.out.println("Thread: " + name + " interrupted.");
            Thread.currentThread().interrupt(); // Preserve interrupt status
        }
        System.out.println("Thread: " + name + " finishing.");
    }
}

public class SimpleJavaProcess {
    public static void main(String[] args) {
        System.out.println("Main thread starting.");

        // Worker threads will run for 6 iterations * 5 seconds/iteration = 30 seconds
        Thread worker1 = new Thread(new SimpleWorker("Worker-1", 6, 5000));
        Thread worker2 = new Thread(new SimpleWorker("Worker-2", 6, 5000));

        worker1.start();
        worker2.start();

        System.out.println("Main thread waiting for worker threads to complete...");
        try {
            worker1.join(); // Wait for worker1 to finish
            worker2.join(); // Wait for worker2 to finish
        } catch (InterruptedException e) {
            System.out.println("Main thread interrupted while waiting for worker threads.");
            Thread.currentThread().interrupt(); // Preserve interrupt status
        }
        System.out.println("Main thread finishing.");
    }
}
```

To try this out, save the code above into a file named SimpleJavaProcess.java. Then, open your terminal or command prompt and compile it:

javac SimpleJavaProcess.java

Once it compiles successfully, you can run it:

java SimpleJavaProcess

You should see output from the main thread and the two worker threads, progressing over about 30 seconds.

While the SimpleJavaProcess program is running (it will run for about 30 seconds), open another terminal window to try these commands:

Finding the Process ID (PID):

First, you'll need the Process ID (PID) of your running SimpleJavaProcess.

Using jps (Java Virtual Machine Process Status Tool - Recommended):

This tool is part of the JDK and specifically lists Java processes.

jps -l

This command lists the PID and the full main class name or JAR file name, making it easy to identify your SimpleJavaProcess.

On Linux/macOS:

If jps isn't available or you prefer a general OS tool:

ps aux | grep SimpleJavaProcess

Look for the line corresponding to your java SimpleJavaProcess command. The PID is usually the second column.

On Windows (Task Manager):

Open Task Manager, go to the Details tab, and look for java.exe (or javaw.exe). The "Command line" column (if added) can help you confirm it's running SimpleJavaProcess.

Sample jps output:

```
~/Projects/codewiz/java-thread-examples jps
57691 Launcher
19676 Main
57692 SimpleJavaProcess
```

Once you have the PID, replace <PID> in the commands below with the actual ID.

Using ps:

The ps command can display threads associated with a process. The -M flag shown here is the macOS form; on Linux, use ps -T -p <PID> instead.

ps -M <PID>

Using top:

The top command provides a dynamic real-time view of running processes and can also show threads.

top -H -p <PID>

The -H option makes top display individual threads, so you can see the resource usage (CPU, memory) per thread. This invocation is for the Linux version of top; the macOS version takes different options.

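You'll notice the main thread, your two worker threads, and also several other threads managed by the JVM itself (e.g., Garbage Collection threads like "GC Thread", JIT (Just-In-Time) compiler threads like "C2 CompilerThread", signal dispatchers, etc.). Because the example never passes a name to the Thread constructor, the workers show up under the JVM's default names "Thread-0" and "Thread-1"; "Worker-1" and "Worker-2" are only labels used in the program's own output.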

Using jstack (Cross-Platform):

The jstack utility (part of the JDK) is excellent for getting a "thread dump" of a Java process. This shows the stack trace for each thread, which tells you what each thread is doing at that moment, including its state (e.g., RUNNABLE, TIMED_WAITING for Thread.sleep(), WAITING, BLOCKED).

jstack <PID>

Run this command while SimpleJavaProcess is active. In the output, you should be able to identify:

- The main thread (likely in WAITING state due to join()).
- Your worker threads (likely in TIMED_WAITING state due to Thread.sleep()).

jstack is particularly useful for diagnosing issues like application hangs or deadlocks, as it shows exactly where each thread is stuck.

These tools give you a glimpse into how the OS and JVM manage the threads you create and the ones they use internally.

```
~/Projects/codewiz/java-streams-examples jstack 57692

"main" #3 [10243] prio=5 os_prio=31 cpu=48.34ms elapsed=18.34s tid=0x0000000131809200 nid=10243 in Object.wait() [0x000000016dc46000]
   java.lang.Thread.State: WAITING (on object monitor)
        at java.lang.Object.wait0(java.base@24/Native Method)
        - waiting on <0x00000006f1fb98a0> (a java.lang.Thread)
        at java.lang.Object.wait(java.base@24/Object.java:389)
        at java.lang.Thread.join(java.base@24/Thread.java:1860)
        - locked <0x00000006f1fb98a0> (a java.lang.Thread)
        at java.lang.Thread.join(java.base@24/Thread.java:1936)
        at com.codewiz.examples.SimpleJavaProcess.main(SimpleJavaProcess.java:44)

"Thread-0" #26 [43267] prio=5 os_prio=31 cpu=6.03ms elapsed=18.29s tid=0x0000000132009000 nid=43267 waiting on condition [0x0000000170236000]
   java.lang.Thread.State: TIMED_WAITING (sleeping)
        at java.lang.Thread.sleepNanos0(java.base@24/Native Method)
        at java.lang.Thread.sleepNanos(java.base@24/Thread.java:482)
        at java.lang.Thread.sleep(java.base@24/Thread.java:513)
        at com.codewiz.examples.SimpleWorker.run(SimpleJavaProcess.java:21)
        at java.lang.Thread.runWith(java.base@24/Thread.java:1460)
        at java.lang.Thread.run(java.base@24/Thread.java:1447)

"Thread-1" #27 [43011] prio=5 os_prio=31 cpu=5.77ms elapsed=18.29s tid=0x0000000125012400 nid=43011 waiting on condition [0x0000000170442000]
   java.lang.Thread.State: TIMED_WAITING (sleeping)
        at java.lang.Thread.sleepNanos0(java.base@24/Native Method)
        at java.lang.Thread.sleepNanos(java.base@24/Thread.java:482)
        at java.lang.Thread.sleep(java.base@24/Thread.java:513)
        at com.codewiz.examples.SimpleWorker.run(SimpleJavaProcess.java:21)
        at java.lang.Thread.runWith(java.base@24/Thread.java:1460)
        at java.lang.Thread.run(java.base@24/Thread.java:1447)

"C2 CompilerThread0" #19 [26627] daemon prio=9 os_prio=31 cpu=17.73ms elapsed=18.33s tid=0x000000013200ae00 nid=26627 waiting on condition [0x0000000000000000]
   java.lang.Thread.State: RUNNABLE
   No compile task

"C1 CompilerThread0" #22 [27139] daemon prio=9 os_prio=31 cpu=31.26ms elapsed=18.33s tid=0x000000013183b200 nid=27139 waiting on condition [0x0000000000000000]
   java.lang.Thread.State: RUNNABLE
   No compile task

... (other threads)
```
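A similar view is available from inside the program through the standard `Thread.getAllStackTraces()` method, which returns every live thread in the JVM. The sketch below is an illustration with made-up names (`ThreadListingDemo`, `namesWhileWorkersRun`): it starts two workers with explicit names via the `Thread(Runnable, String)` constructor, so they appear as Worker-1 and Worker-2 rather than under default names, and then lists all thread names.

```java
import java.util.Set;
import java.util.TreeSet;
import java.util.concurrent.CountDownLatch;

// Illustrative sketch: list the JVM's live threads from inside the program.
class ThreadListingDemo {

    // Names of all live threads in this JVM, sorted for readable output.
    static Set<String> liveThreadNames() {
        Set<String> names = new TreeSet<>();
        for (Thread t : Thread.getAllStackTraces().keySet()) {
            names.add(t.getName());
        }
        return names;
    }

    // Starts two explicitly named workers and lists threads while they are parked.
    static Set<String> namesWhileWorkersRun() {
        CountDownLatch started = new CountDownLatch(2);
        CountDownLatch release = new CountDownLatch(1);
        Runnable task = () -> {
            started.countDown(); // signal that this worker is running
            try {
                release.await(); // stay alive until the listing is done
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        Thread w1 = new Thread(task, "Worker-1");
        Thread w2 = new Thread(task, "Worker-2");
        w1.start();
        w2.start();
        try {
            started.await(); // both workers are definitely alive past this point
            return liveThreadNames();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } finally {
            release.countDown(); // let the workers finish
        }
    }

    public static void main(String[] args) {
        namesWhileWorkersRun().forEach(System.out::println);
    }
}
```

The exact set of JVM-internal thread names in the output varies by JDK version and platform, so treat the listing as a snapshot rather than a fixed result.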

Understanding processes, threads, and multithreading is fundamental to grasping how modern software and operating systems work their magic. These concepts are pivotal for anyone looking to develop efficient, robust, and responsive applications, whether you're building a complex server-side system, a snappy desktop application, or a smooth mobile app.

Keep exploring, keep learning, and happy coding!
