In this guide, we will cover the basics of Docker, a powerful tool for developing, shipping, and running applications. If you've ever heard someone say, 'It works on my machine,' you're already familiar with one of the biggest problems Docker aims to solve. Picture this: you're working on a project, and everything runs smoothly on your local machine. But when you deploy it to a server or share it with a colleague, things start to break because of differences in operating systems, installed libraries, or dependency versions. Docker lets you package an application together with its dependencies into a container, which runs the same way on any machine with a compatible Docker installation, so you don't have to rebuild the environment by hand each time.


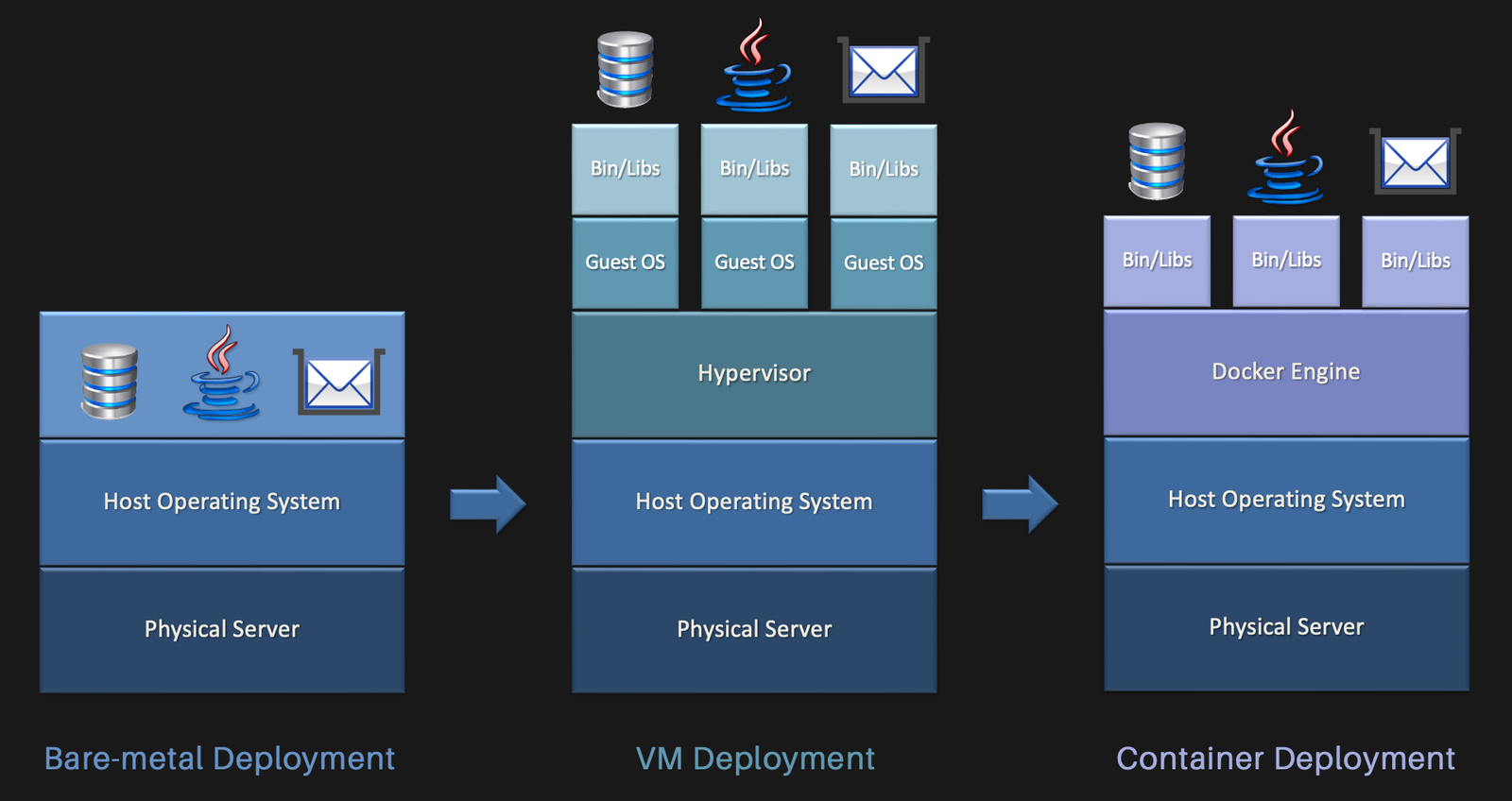

In the beginning, we had physical (bare metal) servers, each with a single operating system installed directly on the hardware. While this setup was powerful, it came with significant drawbacks. First, bare metal servers were expensive to scale: each application typically got its own dedicated server, which left much of the hardware underutilized. Second, setting up and managing these servers was complex and time-consuming. If you wanted to run multiple applications on one server, you had to carefully manage dependencies and configurations to avoid conflicts.

Next came virtual machines (VMs), which brought a major improvement. VMs allow us to run multiple isolated operating systems on a single physical server using a hypervisor. Each VM includes its own operating system and application, which solves the dependency conflicts and isolation issues. However, VMs are still relatively heavy. They consume a lot of resources because each VM includes a full operating system, even if it's just for running a small application.

Docker takes the concept of virtualization to the next level with containers.

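Unlike VMs, containers share the host operating system's kernel instead of each booting a full guest OS, which makes them much more lightweight: they typically start in seconds or less and use far less memory and disk. As a result, you can run many more containers on a single host than VMs.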

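At the heart of Docker's efficiency and isolation are two key features of the Linux kernel:

Namespaces: Give each container its own isolated view of the system, including process IDs, network interfaces, mount points, and hostname, so processes in one container cannot see those in another.

Control groups (cgroups): Limit and account for the resources a container can use, such as CPU, memory, and I/O.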

By combining namespaces and cgroups, Docker creates lightweight, isolated environments for each application, all sharing the same host kernel. This lets you run multiple applications side by side, isolated from one another, without the overhead of traditional virtual machines.

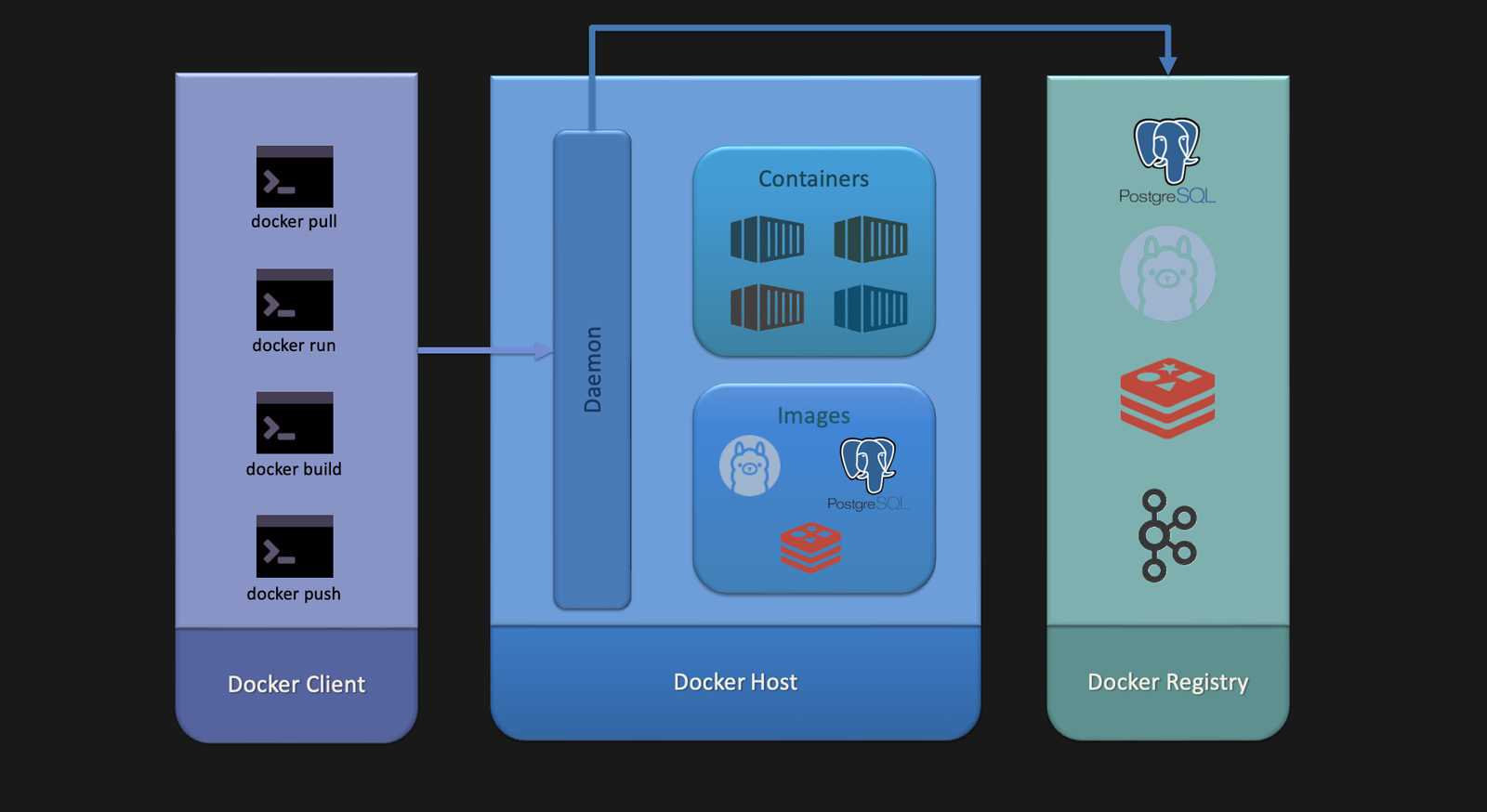

Docker follows a client-server architecture. The Docker client talks to the Docker daemon through a REST API (on Linux, by default over a local UNIX socket), and the daemon does the heavy lifting of building, running, and distributing containers. The client and daemon can run on the same system, or the client can connect to a daemon on a remote machine.

Here's a high-level overview of the Docker architecture:

Docker Client: The primary way users interact with Docker. It sends commands to the Docker daemon, which then executes them.

Docker Daemon: The Docker daemon (dockerd) is a background service that manages Docker objects like images, containers, networks, and volumes. It listens for Docker API requests and handles container lifecycle operations.

Images: Read-only templates used to create containers. An image includes everything needed to run an application—code, runtime, libraries, environment variables, and configuration files. Think of it as a snapshot of your application and its dependencies at a specific point in time.

Containers: Runnable instances of Docker images. A container includes the application and all its dependencies, isolated from the host system. You can start, stop, move, or delete containers using Docker commands.

Docker Registry: A storage and distribution service for Docker images. You can think of it as a public library where you can find and share images. Docker Hub is the default registry, but you can also use private registries or run your own.

Docker CLI: Command-line interface for Docker. It allows users to interact with Docker using commands like docker run, docker build, docker pull, and more.

To get started, install Docker on your system. On Linux you can install Docker Engine directly; on Windows and macOS, Docker Desktop bundles the engine (running inside a lightweight Linux virtual machine) together with the CLI. Detailed installation instructions for each platform are available on the official Docker website.

Once Docker is installed, you can pull (download) an image and run a container from it. Let's start with a simple example using the official Nginx image.

```shell
docker pull nginx
```

To list the images you have downloaded, run:

```shell
docker images
```

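Now, let's run a container from the nginx image. The -d flag runs it in the background (detached mode), -p 8080:80 maps port 8080 on the host to port 80 inside the container, and --name mynginx gives the container a name to use in later commands: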

```shell
docker run -d -p 8080:80 --name mynginx nginx
```

To see the running containers, you can use:

```shell
docker ps
```

Now you can access the Nginx server running in the container by visiting http://localhost:8080 in your browser.

To check the logs of a container, you can use:

```shell
docker logs mynginx
```

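To run a command inside a running container, use docker exec. For example, this opens an interactive bash shell in mynginx (-i keeps STDIN open and -t allocates a terminal; type exit to leave):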

```shell
docker exec -it mynginx bash
```

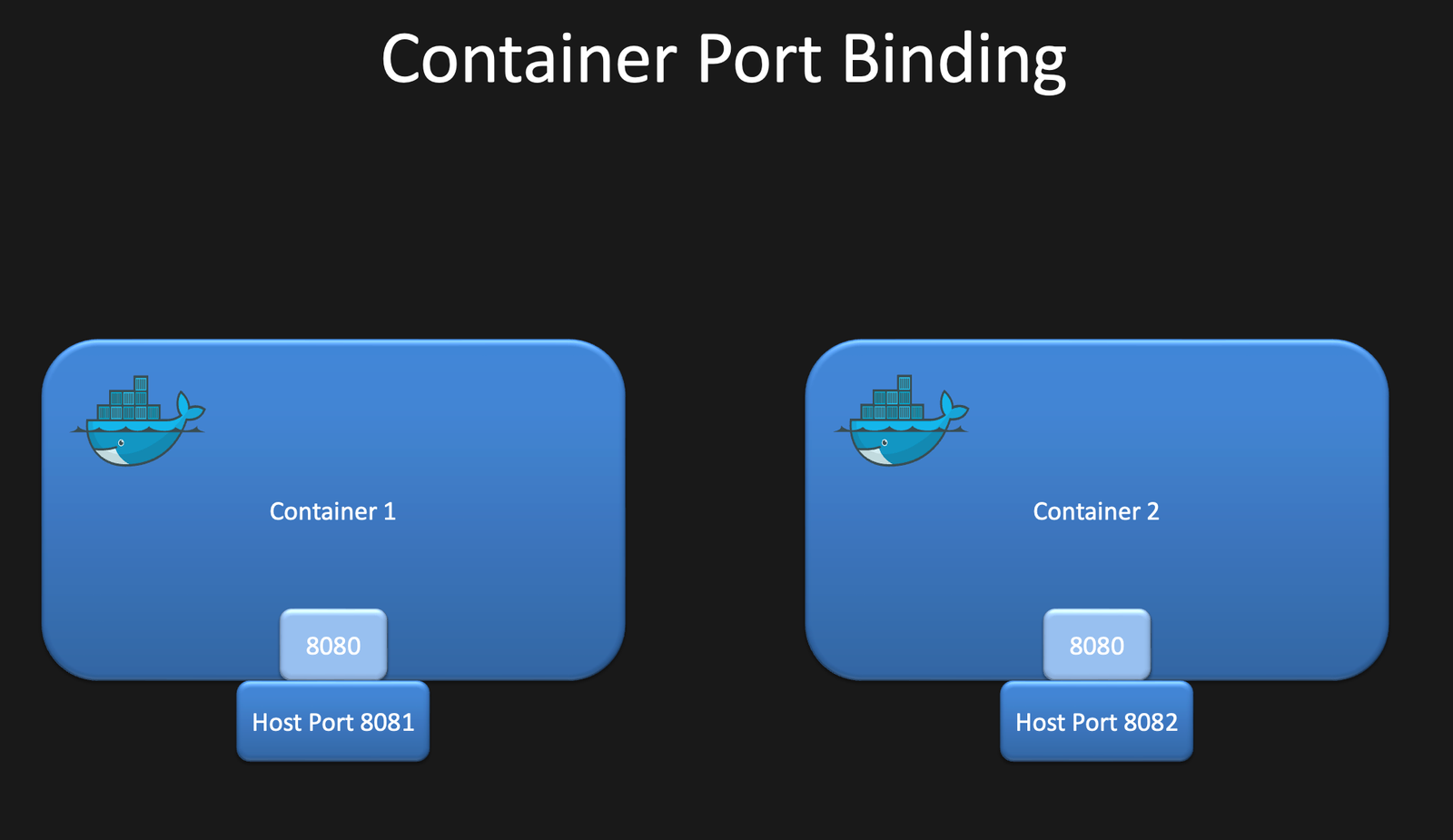

When running a container, you can map ports on the host machine to ports in the container. This allows you to access services running inside the container from outside.

Port mappings are set with the -p flag, in the form -p HOST_PORT:CONTAINER_PORT. For example, to map port 8081 on the host to port 80 in the container:

```shell
docker run -d -p 8081:80 nginx
```

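Because each container has its own network stack, several containers can listen on the same port internally, as long as each one is published on a different host port. For example, you can run two more Nginx containers, both listening on port 80 inside but reachable on host ports 8082 and 8083:

```shell
docker run -d -p 8082:80 nginx
docker run -d -p 8083:80 nginx
```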

Docker networking allows containers to communicate with each other and with the outside world. By default, Docker connects containers to a built-in network named bridge. You can also create your own networks to isolate groups of containers from one another.

You can list the available networks using:

```shell
docker network ls
```

To create a new network, you can use:

```shell
docker network create mynetwork
```

To connect a container to a specific network, you can use:

```shell
docker network connect mynetwork mycontainer
```

To attach a container to a network when you start it, use --network:

```shell
docker run --network mynetwork myimage
```

Docker volumes provide a way to persist data generated by containers. Volumes are managed by Docker and stored outside the container's own filesystem, so their data persists even after the container is removed.

You can create a volume using:

```shell
docker volume create mysharedvolume
```

To list the available volumes, you can use:

```shell
docker volume ls
```

To mount a volume when running a container, pass it with -v in the form VOLUME_NAME:CONTAINER_PATH. For example:

```shell
docker run -d --name container1 -v mysharedvolume:/data nginx
```

This mounts the mysharedvolume volume to the /data directory in the container.

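You can also mount a directory from the host instead of a Docker-managed volume; this is called a bind mount. For example, to have two containers share the same local directory:

```shell
docker run -d --name container2 -v /home/user/local-data:/data nginx
docker run -d --name container3 -v /home/user/local-data:/data nginx
```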
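A common use of bind mounts during development is serving files you are editing on the host. This sketch assumes a local folder named site and host port 8085, both arbitrary; the :ro suffix makes the mount read-only inside the container, so the container cannot modify your files.

```shell
# Create a small page in a local directory
mkdir -p site
echo '<h1>Hello from a bind mount</h1>' > site/index.html

# Serve that directory with nginx; "$(pwd)" gives Docker an absolute host path
docker run -d --name site -p 8085:80 -v "$(pwd)/site":/usr/share/nginx/html:ro nginx
```

Edit site/index.html and reload http://localhost:8085: nginx serves the new content without rebuilding or restarting the container.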

To stop a running container, you can use:

```shell
docker stop mynginx
```

To list all containers, including stopped ones, use:

```shell
docker ps -a
```

To remove a container, you can use:

```shell
docker rm mynginx
```

To remove all stopped containers, you can use:

```shell
docker container prune
```

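To remove an image, pass its name to docker rmi. Note that docker rmi takes an image name (nginx), not a container name (mynginx), and refuses to delete an image that any container, running or stopped, still uses, so remove those containers first:

```shell
docker rmi nginx
```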

Docker is a powerful tool that simplifies the development, shipping, and running of applications. By packaging applications with their dependencies into containers, Docker allows developers to build once and run anywhere. This guide covered the basics of Docker, including installation, running containers, networking, volumes, and managing containers. By mastering Docker, developers can streamline their development workflow and deploy applications with confidence.


Docker containers share the host OS kernel and are much lighter than VMs, which include a full operating system. Containers start faster and use fewer resources while still providing isolation.

While Docker runs on Linux, you don't need deep Linux knowledge to use Docker. Most Docker commands are straightforward, and you can run Docker on Windows and macOS using Docker Desktop.

Docker is for containerizing individual applications, while Kubernetes is for orchestrating multiple containers across multiple machines. Start with Docker for single applications, then consider Kubernetes for complex deployments.

Docker is widely used in production. Many companies run Docker containers in production environments, often with orchestration tools like Kubernetes or Docker Swarm.

A Dockerfile defines how to build a single container image, while Docker Compose (docker-compose) defines and runs multi-container applications. Use a Dockerfile to package a single service and Compose for applications made of multiple services; the two are often used together, with Compose building each service from its Dockerfile.
