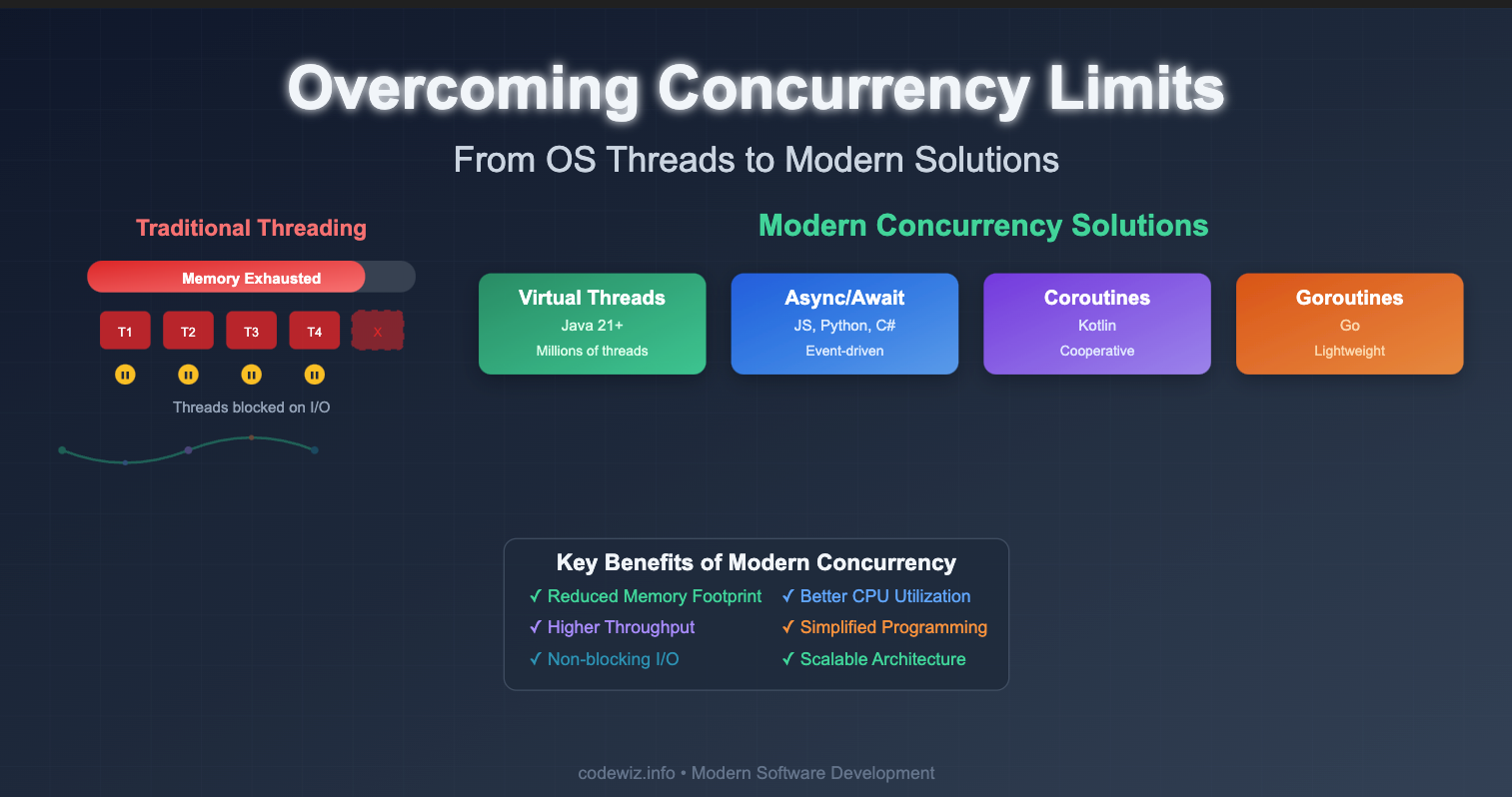

In a previous post, Process vs Thread, we went through how platform threads can improve performance by executing tasks concurrently. However, as modern applications demand ever higher levels of concurrency, traditional OS threads have significant limitations that can bottleneck scalability.

Imagine trying to serve 10,000 simultaneous users with traditional threading—you'd quickly exhaust system resources! This post explores different approaches that modern programming languages and frameworks provide to overcome these limitations: virtual threads, async/await, coroutines, and reactive programming.

Think of traditional threading like a restaurant where each table (request) must have a dedicated waiter (thread) standing by, even when customers are reading the menu or talking. Most of the time, waiters are idle, yet they're still consuming the restaurant's limited space and resources. When all waiters are occupied, new customers must wait outside, even though the kitchen (CPU) isn't busy!

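OS threads are managed by the operating system and come with significant overhead: each one needs its own stack memory, and switching between them requires a context switch handled by the OS kernel.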

In typical business applications, threads spend much of their time blocked waiting for I/O: database queries, calls to other services, or file reads.

This creates a paradox: while your CPU remains underutilized, you cannot create more threads to handle additional requests due to memory limitations.

In the thread-per-request model, each incoming request is assigned a dedicated thread. When these threads get blocked (often waiting for I/O), memory usage increases rapidly. Even though the CPU is mostly idle, the application eventually hits the OS thread/memory limit, preventing it from handling more requests.
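To make the bottleneck concrete, here is a minimal Java sketch that models a thread-per-request server as a fixed pool of platform threads, with Thread.sleep standing in for a blocking database or HTTP call. The class name, pool size, and timings are illustrative assumptions, not measurements: with 10 threads and 40 requests that each block for 100 ms, the requests are served in waves, so the run takes at least 400 ms even though the CPU does almost nothing.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class ThreadPerRequestDemo {
    // Runs `requests` simulated requests on a fixed pool of `poolSize` platform threads.
    // Each request blocks for `ioMillis` (a stand-in for a database or HTTP call).
    // Returns the wall-clock time in milliseconds.
    static long runBlockingRequests(int poolSize, int requests, long ioMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        long start = System.nanoTime();
        for (int i = 0; i < requests; i++) {
            pool.submit(() -> {
                try {
                    Thread.sleep(ioMillis); // the thread is blocked while the CPU sits idle
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        pool.shutdown(); // accept no new work, let queued requests finish
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    public static void main(String[] args) {
        // 40 requests on 10 threads, 100 ms of "I/O" each: extra requests wait in the queue
        long elapsed = runBlockingRequests(10, 40, 100);
        System.out.println("Elapsed: " + elapsed + " ms");
    }
}
```

In this model the only way to serve more blocked requests at once is to add threads, and every extra platform thread costs memory, which is exactly the limit described above.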

Virtual threads represent a paradigm shift in Java concurrency. Introduced as a preview feature in Java 19 and finalized in Java 21, they're lightweight threads managed entirely by the JVM rather than the operating system.

Think of virtual threads like ride-sharing for OS threads. Instead of each passenger (virtual thread) needing their own car (OS thread), multiple passengers can share cars efficiently. When a passenger gets out to run an errand (I/O operation), the car becomes available for other passengers.

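Key mechanisms: the JVM runs virtual threads on a small pool of platform threads called carrier threads. When a virtual thread blocks on I/O, the JVM unmounts it from its carrier, which is then free to run another virtual thread; when the I/O completes, the virtual thread is mounted again, possibly on a different carrier.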
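One way to observe this mechanism is to count platform threads while many virtual threads are blocked. The sketch below (Java 21+; the class and method names are made up for illustration) starts 10,000 virtual threads that each sleep for a second, then asks ThreadMXBean for the live thread count. ThreadMXBean reports platform threads only, so the number reflects the carrier threads plus JVM housekeeping threads rather than the 10,000 virtual threads.

```java
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

public class CarrierThreadDemo {
    // Starts `count` virtual threads that block in sleep(), then returns how many
    // platform (OS-backed) threads the JVM has alive at that moment.
    static int platformThreadsWhileBlocked(int count) {
        CountDownLatch started = new CountDownLatch(count);
        List<Thread> threads = new ArrayList<>(count);
        try {
            for (int i = 0; i < count; i++) {
                threads.add(Thread.ofVirtual().start(() -> {
                    started.countDown();
                    try {
                        // Blocking call: the virtual thread unmounts and frees its carrier
                        Thread.sleep(1_000);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                }));
            }
            started.await(); // every virtual thread has started
            // ThreadMXBean counts live platform threads only, not virtual threads
            int platformThreads = ManagementFactory.getThreadMXBean().getThreadCount();
            for (Thread t : threads) {
                t.join();
            }
            return platformThreads;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Platform threads while 10,000 virtual threads sleep: "
                + platformThreadsWhileBlocked(10_000));
    }
}
```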

```java
import java.util.concurrent.Executors;

// Simple Virtual Thread Creation (requires Java 21+)
public class VirtualThreadExample {
    public static void main(String[] args) throws InterruptedException {
        // Method 1: Using Thread.startVirtualThread()
        Thread vThread1 = Thread.startVirtualThread(() -> {
            System.out.println("Virtual thread 1: " + Thread.currentThread());
        });

        // Method 2: Using Thread.ofVirtual()
        Thread vThread2 = Thread.ofVirtual()
                .name("custom-virtual-thread")
                .start(() -> {
                    System.out.println("Virtual thread 2: " + Thread.currentThread());
                });

        // Virtual threads are daemon threads, so wait for them explicitly
        vThread1.join();
        vThread2.join();

        // Method 3: Using ExecutorService
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < 10000; i++) {
                final int taskId = i;
                executor.submit(() -> {
                    // Simulate I/O operation
                    try {
                        Thread.sleep(1000);
                        System.out.println("Task " + taskId + " completed on " + Thread.currentThread());
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        } // close() waits for all submitted tasks to finish
    }
}
```

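To enable virtual threads in a Spring Boot application (the property is available from Spring Boot 3.2 and takes effect when running on Java 21 or later), add the following property to application.properties:

```properties
spring.threads.virtual.enabled=true
```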

Async/await represents a programming model that makes asynchronous code look and behave more like synchronous code, while still maintaining non-blocking execution. Think of it as a smart waiter who takes multiple orders simultaneously—when one table's order is cooking, they serve other tables instead of standing idle.

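await: execution of the function pauses until the awaited operation completes, but the thread itself is not blocked and can run other work in the meantime.

JavaScript (Node.js) Example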

```javascript
// Advanced async/await patterns in JavaScript

// Simulated I/O helpers (placeholders): each resolves after a fixed delay
const delay = (ms, value) => new Promise(resolve => setTimeout(() => resolve(value), ms));
const fetchUserProfile = (userId) => delay(1000, { id: userId });
const fetchUserPreferences = (userId) => delay(500, { theme: 'dark' });
const fetchUserHistory = (userId) => delay(800, []);

// Sequential vs Parallel execution
async function fetchUserData(userId) {
  console.log(`Fetching data for user ${userId}...`);
  // Sequential - each call starts after the previous one finishes (~2.3 seconds total)
  const profile = await fetchUserProfile(userId);
  const preferences = await fetchUserPreferences(userId);
  const history = await fetchUserHistory(userId);
  return { profile, preferences, history };
}

async function fetchUserDataParallel(userId) {
  console.log(`Fetching data for user ${userId} in parallel...`);
  // Parallel - takes ~1 second total (the longest operation)
  const [profile, preferences, history] = await Promise.all([
    fetchUserProfile(userId),     // 1 second
    fetchUserPreferences(userId), // 0.5 seconds
    fetchUserHistory(userId)      // 0.8 seconds
  ]);
  return { profile, preferences, history };
}
```

Python (asyncio)

```python
import asyncio
import time
from typing import List


async def fetch_data(user_id: int) -> dict:
    """Simulate async API call"""
    await asyncio.sleep(0.5)  # Simulate network delay
    return {'user_id': user_id, 'data': f'User {user_id} data'}


async def process_users_sequential(user_ids: List[int]) -> List[dict]:
    """Process users one by one (slow)"""
    results = []
    for user_id in user_ids:
        result = await fetch_data(user_id)
        results.append(result)
    return results


async def process_users_concurrent(user_ids: List[int]) -> List[dict]:
    """Process users concurrently (fast)"""
    tasks = [fetch_data(user_id) for user_id in user_ids]
    return await asyncio.gather(*tasks)


async def main():
    user_ids = [1, 2, 3, 4, 5]

    # Sequential processing
    start = time.time()
    await process_users_sequential(user_ids)
    sequential_time = time.time() - start

    # Concurrent processing
    start = time.time()
    await process_users_concurrent(user_ids)
    concurrent_time = time.time() - start

    print(f"Sequential: {sequential_time:.2f}s")
    print(f"Concurrent: {concurrent_time:.2f}s")
    print(f"Speedup: {sequential_time/concurrent_time:.1f}x")


# Run the example
asyncio.run(main())
```

C# (.NET)

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public class AsyncExamples
{
    private static readonly HttpClient httpClient = new HttpClient();

    // Basic async/await pattern
    public async Task<string> GetUserAsync(int userId)
    {
        var response = await httpClient.GetAsync($"https://api.example.com/users/{userId}");
        return await response.Content.ReadAsStringAsync();
    }

    // Parallel execution: start all requests, then await them together
    public async Task<string[]> GetMultipleUsersAsync(int[] userIds)
    {
        var tasks = userIds.Select(id => GetUserAsync(id));
        return await Task.WhenAll(tasks);
    }

    // With timeout (Task.WaitAsync requires .NET 6 or later)
    public async Task<string> GetUserWithTimeoutAsync(int userId, int timeoutSeconds = 5)
    {
        using var cts = new CancellationTokenSource(TimeSpan.FromSeconds(timeoutSeconds));
        // Stops waiting (throws OperationCanceledException) when the token fires;
        // the underlying HTTP request itself is not cancelled
        return await GetUserAsync(userId).WaitAsync(cts.Token);
    }
}
```
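Java has no async/await keywords, but the same sequential-versus-parallel contrast can be sketched with CompletableFuture, the standard library's composable future type (delayedExecutor needs Java 9+). In this hypothetical example, fetch() simulates a remote call by completing after a delay, and allOf() plays the role of Promise.all, Task.WhenAll, or asyncio.gather. Unlike await, join() blocks the calling thread, so in real code it belongs at the edge of a program, not inside request-handling logic.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

public class CompletableFutureDemo {
    // Hypothetical remote call: completes with `value` after `millis` ms.
    // delayedExecutor schedules the completion instead of parking a thread per call.
    static CompletableFuture<String> fetch(String value, long millis) {
        return CompletableFuture.supplyAsync(() -> value,
                CompletableFuture.delayedExecutor(millis, TimeUnit.MILLISECONDS));
    }

    // Sequential: each call starts only after the previous one finished (~300 + 150 + 240 ms)
    static List<String> fetchAllSequential() {
        String profile = fetch("profile", 300).join();
        String preferences = fetch("preferences", 150).join();
        String history = fetch("history", 240).join();
        return List.of(profile, preferences, history);
    }

    // Parallel: all three calls are in flight at once (~300 ms, the longest one)
    static List<String> fetchAllParallel() {
        CompletableFuture<String> profile = fetch("profile", 300);
        CompletableFuture<String> preferences = fetch("preferences", 150);
        CompletableFuture<String> history = fetch("history", 240);
        return CompletableFuture.allOf(profile, preferences, history)
                // every future is complete here, so these join() calls return immediately
                .thenApply(ignored -> List.of(profile.join(), preferences.join(), history.join()))
                .join();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        fetchAllSequential();
        long sequentialMs = (System.nanoTime() - start) / 1_000_000;

        start = System.nanoTime();
        fetchAllParallel();
        long parallelMs = (System.nanoTime() - start) / 1_000_000;

        System.out.println("Sequential: " + sequentialMs + " ms, parallel: " + parallelMs + " ms");
    }
}
```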

Coroutines in Kotlin represent a paradigm where functions can be suspended and resumed, allowing for cooperative multitasking. Unlike preemptive threading where the OS forcibly switches between threads, coroutines voluntarily yield control at specific suspension points.

Think of coroutines like a dance floor where dancers (coroutines) take turns using the space. When one dancer needs to step out briefly (suspension point), they politely yield the floor to others, then smoothly resume their dance when ready.

Key characteristics of Kotlin coroutines include explicit suspension points: a coroutine can only pause where it calls a suspend function, such as delay() or await().

The coroutine dispatcher manages execution by moving coroutines between active and suspended states. When a coroutine hits a suspension point (like waiting for network I/O), it yields its thread to other coroutines, maximizing resource utilization.
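The core idea of cooperative multitasking, where tasks hand control back at known points and a scheduler interleaves them, can be shown without any library. The following toy Java sketch illustrates only that idea; it is not how kotlinx.coroutines is implemented (the Kotlin compiler turns suspend functions into state machines, and dispatchers decide which threads resume them). Each hypothetical Task keeps its state in fields and returns from step() to yield, and a round-robin loop runs every task on a single thread.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

public class CooperativeScheduler {
    // A task does one slice of work per step() and returns true while it has more to do.
    interface Task {
        boolean step();
    }

    // Hypothetical task: logs `name` plus a counter, yielding after every step.
    static Task counter(String name, int steps, List<String> log) {
        return new Task() {
            private int i = 0; // state survives between steps, like a suspended coroutine's locals

            public boolean step() {
                log.add(name + i);
                i++;
                return i < steps; // returning here is the voluntary "suspension point"
            }
        };
    }

    // Round-robin: run one step of the next task, then requeue it if it is not finished.
    static void runAll(Deque<Task> queue) {
        while (!queue.isEmpty()) {
            Task task = queue.poll();
            if (task.step()) {
                queue.add(task);
            }
        }
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        Deque<Task> queue = new ArrayDeque<>();
        queue.add(counter("A", 3, log));
        queue.add(counter("B", 2, log));
        runAll(queue);
        System.out.println(log); // [A0, B0, A1, B1, A2]
    }
}
```

The two tasks take turns on one thread and neither is ever interrupted in the middle of a step, which is what distinguishes this from preemptive OS scheduling.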

```kotlin
// Kotlin Coroutines Example
import kotlinx.coroutines.*

// Suspend functions can be paused and resumed
suspend fun fetchUser(userId: Int): String {
    delay(500) // Non-blocking delay
    return "User $userId"
}

suspend fun fetchUserData(userId: Int): Map<String, String> {
    delay(300)
    return mapOf("email" to "user$userId@example.com")
}

fun main() = runBlocking {
    println("Starting coroutines")

    // Run tasks concurrently with async
    val userDeferred = async { fetchUser(123) }
    val dataDeferred = async { fetchUserData(123) }

    // Wait for both results
    val user = userDeferred.await()
    val userData = dataDeferred.await()
    println("$user: $userData")

    // Launch a separate coroutine
    val job = launch {
        println("Background work starting")
        delay(200)
        println("Background work complete")
    }
    job.join() // Wait for completion
    println("All done!")
}
```

Go's concurrency model is built around goroutines: functions that run concurrently with other functions and are managed by the Go runtime rather than the operating system, so developers can write concurrent code without dealing with low-level thread management. Goroutines are extremely lightweight, so a program can spawn thousands of them without significant overhead.

In Go's goroutine model, the runtime scheduler multiplexes many goroutines onto a small number of OS threads. Each goroutine starts with a small stack (a few kilobytes) that grows as needed, and when a goroutine blocks on a channel or on network I/O, the runtime parks it and runs other goroutines on the same OS thread.

```go
// Go Goroutines Example
package main

import (
	"fmt"
	"sync"
	"time"
)

func process(id int, wg *sync.WaitGroup) {
	defer wg.Done() // signal completion when this goroutine returns
	fmt.Printf("Started goroutine %d\n", id)
	time.Sleep(100 * time.Millisecond) // Simulate some work
	fmt.Printf("Finished goroutine %d\n", id)
}

func main() {
	var wg sync.WaitGroup
	// Launch multiple goroutines
	for i := 1; i <= 5; i++ {
		wg.Add(1)
		go process(i, &wg) // Non-blocking, returns immediately
	}
	wg.Wait() // Block until every goroutine has called Done
	fmt.Println("All goroutines completed")
}
```

Reactive programming treats data as streams of events that flow through your application. Instead of pulling data when you need it, you react to data as it arrives. Think of it like a newspaper subscription—instead of going to the store every day to check for new issues, the newspaper is delivered to you when it's available.

Reactive frameworks like RxJava and Project Reactor (the library behind Spring WebFlux) excel at handling asynchronous, event-driven workloads with built-in backpressure management.

In reactive programming, data flows through streams where each stage processes events asynchronously. A subscriber receives events through three callbacks: onNext() for new data, onError() for failures, and onComplete() for the end of the stream. It controls the flow by calling request(n) to tell the publisher how many more items it is ready to handle; this is backpressure.

The key advantage is that threads do not block while waiting. When an operation needs to wait (like a database query), the thread is released to handle other work. When the result arrives, a callback is triggered to continue processing.

This architecture lets an application handle a very large number of concurrent streams with a small, fixed number of threads, making it well suited to high-throughput, low-latency applications like real-time analytics, IoT data processing, and high-frequency trading systems.
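The onNext/onError/onComplete and request(n) contract is also available in the JDK itself: java.util.concurrent.Flow (Java 9+) mirrors the Reactive Streams interfaces that Reactor and RxJava build on. The minimal sketch below, with illustrative class names, pairs the built-in SubmissionPublisher with a subscriber that requests one item at a time, so demand is signalled explicitly and the publisher only delivers what the subscriber asked for.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;

public class BackpressureDemo {
    // Subscriber that asks for exactly one item at a time via request(1).
    static class OneAtATimeSubscriber implements Flow.Subscriber<Integer> {
        final List<Integer> received = new ArrayList<>();
        final CountDownLatch done = new CountDownLatch(1);
        private Flow.Subscription subscription;

        @Override
        public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(1); // initial demand: one item
        }

        @Override
        public void onNext(Integer item) {
            received.add(item);      // "process" the item
            subscription.request(1); // ready for the next one
        }

        @Override
        public void onError(Throwable throwable) {
            done.countDown();
        }

        @Override
        public void onComplete() {
            done.countDown();
        }
    }

    static List<Integer> publishAndCollect(int count) {
        OneAtATimeSubscriber subscriber = new OneAtATimeSubscriber();
        try (SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            for (int i = 1; i <= count; i++) {
                publisher.submit(i); // blocks the producer while the subscriber's buffer is full
            }
        } // close() signals onComplete after the buffered items
        try {
            subscriber.done.await(); // wait for onComplete (or onError)
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return subscriber.received;
    }

    public static void main(String[] args) {
        List<Integer> items = publishAndCollect(1000);
        System.out.println("Received " + items.size() + " items");
    }
}
```

Because submit() blocks once a subscriber's buffer (256 items by default) is full, a slow consumer also slows the producer, which is backpressure in its simplest form.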

```java
// Spring WebFlux Reactive REST API Example
import java.time.Duration;

import org.springframework.web.bind.annotation.*;
import org.springframework.web.reactive.function.client.WebClient;
import reactor.core.publisher.Flux;
import reactor.core.publisher.Mono;

// User, UserPreferences, UserNotFoundException and UserRepository are application classes;
// UserRepository is assumed to be a reactive repository (e.g. ReactiveCrudRepository)
// that also declares findByNameContaining(String).
@RestController
public class UserController {
    private final UserRepository userRepository;
    // Built once and reused instead of creating a new WebClient per request
    private final WebClient preferencesClient = WebClient.create("https://preferences-service");

    public UserController(UserRepository userRepository) {
        this.userRepository = userRepository;
    }

    @GetMapping("/users")
    public Flux<User> getAllUsers() {
        return userRepository.findAll();
    }

    @GetMapping("/users/{id}")
    public Mono<User> getUserById(@PathVariable String id) {
        return userRepository.findById(id)
                .switchIfEmpty(Mono.error(new UserNotFoundException(id)));
    }

    @PostMapping("/users")
    public Mono<User> createUser(@RequestBody User user) {
        return userRepository.save(user);
    }

    @GetMapping("/users/search")
    public Flux<User> searchUsers(@RequestParam String query) {
        return userRepository.findByNameContaining(query)
                .filter(user -> user.isActive())
                .flatMap(user -> enrichUserData(user)) // enrich users concurrently
                .timeout(Duration.ofSeconds(3));
    }

    private Mono<User> enrichUserData(User user) {
        return Mono.just(user)
                .zipWith(fetchUserPreferences(user.getId()))
                .map(tuple -> {
                    User u = tuple.getT1();
                    u.setPreferences(tuple.getT2());
                    return u;
                });
    }

    private Mono<UserPreferences> fetchUserPreferences(String userId) {
        // Fetch from another service without blocking; fall back to defaults on error
        return preferencesClient.get()
                .uri("/preferences/{id}", userId)
                .retrieve()
                .bodyToMono(UserPreferences.class)
                .onErrorResume(e -> Mono.just(new UserPreferences()));
    }
}
```

In this example, all database and network operations are non-blocking. The application can handle thousands of concurrent requests with a small number of threads. When one operation is waiting for I/O, the thread can be used to process other requests. Backpressure ensures that if a client is slow at consuming data, the server won't overwhelm it with more data than it can handle.

In this article, we explored several approaches to high-concurrency programming: Java virtual threads, async/await, Kotlin coroutines, Go goroutines, and reactive programming. Each model has its strengths and is suited to different types of applications.

For more in-depth tutorials on Java, Spring, and modern software development, check out my content:

🔗 Blog: https://codewiz.info

🔗 LinkedIn: https://www.linkedin.com/in/code-wiz-740370302/

🔗 Medium: https://medium.com/@code.wizzard01

🔗 Github: https://github.com/CodeWizzard01
